Caches have been part of the CPUs in our PCs since Intel's antediluvian 486. We have seen them in different capacities and organizations, and they are so central to the performance of a contemporary processor that they have become an indispensable part of it. Yet they come in small quantities, which leads us to ask: what stops manufacturers from putting more cache on their processors?

If you look at the high-performance CPU market, you will find IBM's POWER processors, which for years have used large amounts of embedded DRAM (eDRAM) as cache; the POWER9, for example, has 120 MB of L3 cache.

But the recent launch of AMD's Radeon RX 6000 GPUs, with their 128 MB of Infinity Cache, has made us wonder what limits how much memory can be placed inside a processor as cache.

First limitation on cache size: space

The most obvious limitation is the available space on the chip. Like any other component, the cache occupies die area, and so do its interfaces and the coherence mechanisms that ensure it holds the correct data at all times.

That is why, even though SRAM cells shrink with each new manufacturing node, the cache as a whole does not shrink as much: it brings with it additional logic that takes up a significant amount of space on the processor.

Second limitation on cache size: speed

Cache is much faster than RAM, so it can answer requests for data much sooner, but it comes with a couple of issues that affect performance:

- If a piece of data is not in a cache level, a cache miss occurs, which costs several clock cycles during which the processor does no useful work. The most direct way to reduce misses is to make the caches larger.

- The total time spent searching all cache levels, including the ones that miss, must stay below the time it would take the processor to fetch the data directly from RAM.
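Both constraints can be put into numbers with the standard average memory access time (AMAT) model, in which every access pays a level's hit latency and only the fraction that misses moves on to the next level. The sketch below assumes hypothetical latencies (4, 12 and 40 cycles for L1, L2 and L3, 200 cycles for RAM) and hypothetical local miss rates; they are illustration values, not measurements of any real processor.

```python
# Average memory access time (AMAT) for a multi-level cache hierarchy.
# All latencies and miss rates used below are hypothetical illustration values.

def amat(levels, ram_latency):
    """levels: list of (hit_latency_cycles, local_miss_rate), ordered from L1 outward.

    Every access that reaches a level pays its hit latency; only the fraction
    that misses there continues to the next level, and finally to RAM.
    """
    total = 0.0
    reach = 1.0  # fraction of all accesses that reach the current level
    for hit_latency, miss_rate in levels:
        total += reach * hit_latency
        reach *= miss_rate
    total += reach * ram_latency
    return total


def miss_path_cycles(levels):
    """Cycles spent checking every cache level before giving up and going to RAM."""
    return sum(hit_latency for hit_latency, _ in levels)


hierarchy = [(4, 0.10), (12, 0.40), (40, 0.50)]  # hypothetical L1, L2, L3
print("AMAT with caches:", amat(hierarchy, ram_latency=200))
print("AMAT without caches:", amat([], ram_latency=200))
print("Lookup cost of a full miss:", miss_path_cycles(hierarchy))
```

With these numbers the hierarchy brings the average access down to roughly 11 cycles, while a request that misses everywhere spends 56 cycles on lookups before even starting the trip to RAM. If those lookup cycles grew close to the RAM latency, the caches would stop paying for themselves, which is the second point above.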

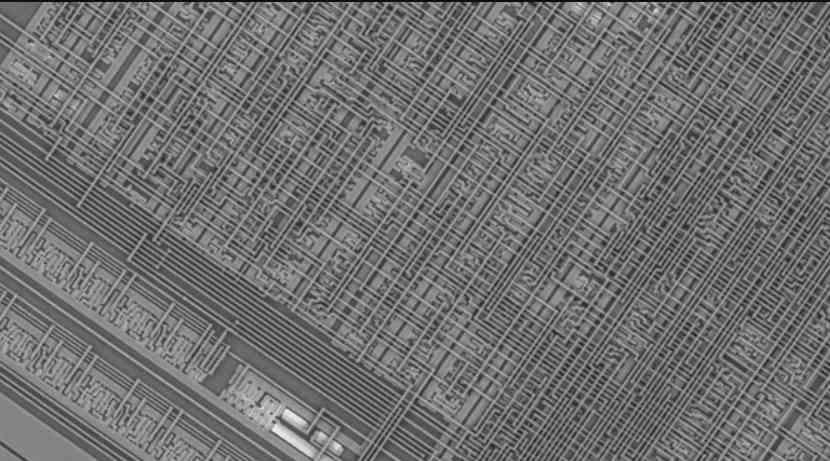

It is not the CPU that looks for the data in the cache but the cache controller, and the larger the cache, the longer the controller takes to find the data in it. In multicore systems, each core keeps a small private cache with its own cache controller, and these are very quick to search. The point is that an oversized cache adds extra clock cycles to every lookup, and that hurts performance.
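To make the controller's job concrete, here is a toy set-associative cache with LRU replacement. It is a minimal sketch under assumed parameters (64-byte lines, 8 ways) chosen for illustration, not the design of any specific CPU. It shows how an address is split into offset, set index and tag, and how a larger cache turns misses into hits on the same access pattern; it does not model lookup latency, which in real hardware grows with size because of longer wires and bigger tag arrays.

```python
from collections import OrderedDict


class SetAssociativeCache:
    """Toy model of what a cache controller does on each lookup (LRU replacement).

    line_size and ways are hypothetical illustration parameters.
    """

    def __init__(self, size_bytes, line_size=64, ways=8):
        self.line_size = line_size
        self.ways = ways
        self.num_sets = size_bytes // (line_size * ways)
        # One OrderedDict per set: keys are tags, ordered from least to most recently used.
        self.sets = [OrderedDict() for _ in range(self.num_sets)]
        self.hits = 0
        self.misses = 0

    def access(self, address):
        line = address // self.line_size   # drop the byte offset within the line
        index = line % self.num_sets       # selects which set to search
        tag = line // self.num_sets        # identifies the line inside that set
        cache_set = self.sets[index]
        if tag in cache_set:               # hardware compares all tags of the set in parallel
            cache_set.move_to_end(tag)     # mark as most recently used
            self.hits += 1
            return True
        self.misses += 1
        if len(cache_set) >= self.ways:
            cache_set.popitem(last=False)  # evict the least recently used line
        cache_set[tag] = None
        return False


def miss_rate(size_bytes, addresses):
    cache = SetAssociativeCache(size_bytes)
    for address in addresses:
        cache.access(address)
    return cache.misses / len(addresses)


# Hypothetical workload: walk a 256 KiB array four times, one access per 64-byte line.
workload = [a for _ in range(4) for a in range(0, 256 * 1024, 64)]
for size_kib in (32, 128, 512):
    print(size_kib, "KiB cache -> miss rate", miss_rate(size_kib * 1024, workload))
```

With this access pattern the 32 KiB and 128 KiB caches miss on every access, because the array never fits and LRU keeps evicting lines just before they are reused, while the 512 KiB cache only misses on the first pass.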

Solutions to the cache size problem

We have picked the solutions we think are most likely to be used in the future to increase cache capacity. They are not the only ones that exist, but they are the most viable and the ones we expect to see implemented in future processors.

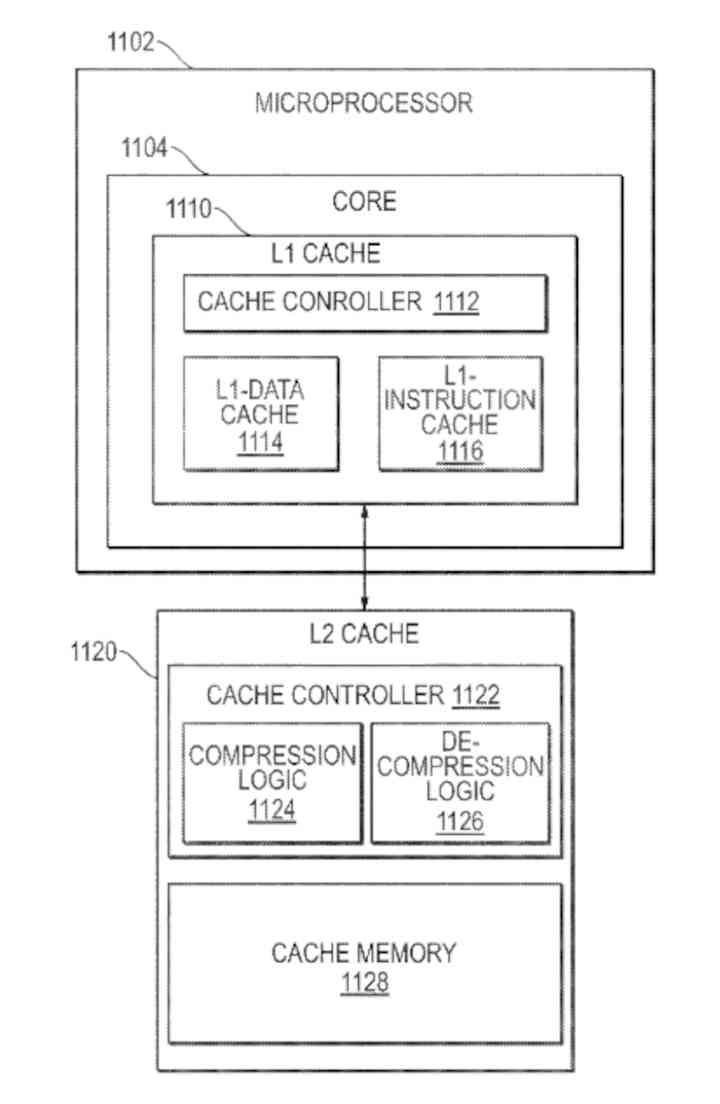

Compression of cached data to gain capacity

One solution to the cache size problem is cache compression: the data stored in the cache is kept compressed and is served to the processor through a hardware decompression unit. In terms of capacity, this lets the cache hold more data without increasing its physical size.
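The article does not name a specific compression algorithm, so as one example we use a simplified version of Base-Delta-Immediate (BDI), a scheme proposed in the research literature that exploits the fact that values in a cache line are often close to each other (pointers, counters, array indices). The sketch below assumes a 64-byte line read as eight 8-byte integers, keeps the first value as the base and stores the rest as signed 1-byte deltas; real BDI tries several base and delta sizes, which this sketch omits.

```python
import struct


def bdi_compress(line: bytes):
    """Simplified Base-Delta-Immediate compression of a 64-byte cache line.

    Treats the line as eight little-endian 8-byte unsigned integers, uses the
    first one as the base and stores every value as a signed 1-byte delta.
    Returns None when some delta does not fit, meaning the line stays uncompressed.
    """
    values = struct.unpack("<8Q", line)
    base = values[0]
    deltas = [v - base for v in values]
    if all(-128 <= d <= 127 for d in deltas):
        # 8-byte base + eight 1-byte deltas = 16 bytes instead of 64
        return struct.pack("<Q8b", base, *deltas)
    return None


def bdi_decompress(blob: bytes) -> bytes:
    """What the decompression unit would do before serving the line to the core."""
    base, *deltas = struct.unpack("<Q8b", blob)
    return struct.pack("<8Q", *(base + d for d in deltas))


# Hypothetical line holding eight neighbouring pointers.
line = struct.pack("<8Q", *(0x7F0000001000 + 8 * i for i in range(8)))
blob = bdi_compress(line)
print(len(line), "->", len(blob))
assert bdi_decompress(blob) == line
```

A line like this one shrinks from 64 to 16 bytes, so the same SRAM array could hold four such lines, while a line with widely scattered values is simply stored as-is.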

The trade-off is that a compression-decompression mechanism adds extra time to the whole data lookup, so it only makes sense if it avoids cache misses, letting the processor find the data it needs in the cache instead of having to go to RAM for it.
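This trade-off can be checked with a one-level average access time calculation. The sketch assumes hypothetical figures: a 40-cycle last-level cache hit, a 200-cycle trip to RAM, and 5 extra cycles for decompression on every hit; the miss rates with and without compression are also invented for illustration.

```python
def amat(hit_cycles, miss_rate, miss_penalty_cycles):
    """Average access time of one cache level: every access pays the hit latency,
    and the fraction that misses also pays the trip to RAM."""
    return hit_cycles + miss_rate * miss_penalty_cycles


def compression_pays_off(hit_cycles, decompress_cycles, miss_rate_plain,
                         miss_rate_compressed, miss_penalty_cycles):
    """Compare a plain cache with a compressed one that is slower to hit
    but misses less often thanks to its larger effective capacity."""
    plain = amat(hit_cycles, miss_rate_plain, miss_penalty_cycles)
    compressed = amat(hit_cycles + decompress_cycles,
                      miss_rate_compressed, miss_penalty_cycles)
    return compressed < plain, plain, compressed


# Hypothetical numbers: compression avoids 5 misses per 100 accesses...
print(compression_pays_off(40, 5, 0.30, 0.25, 200))
# ...versus only 1 miss per 100 accesses.
print(compression_pays_off(40, 5, 0.30, 0.29, 200))
```

Rearranging the comparison, compression wins only when it lowers the miss rate by more than decompress_cycles / miss_penalty_cycles, here 5 / 200 = 2.5 percentage points. In the first case it saves 5 points and comes out ahead; in the second it saves 1 point and ends up slower than the plain cache.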

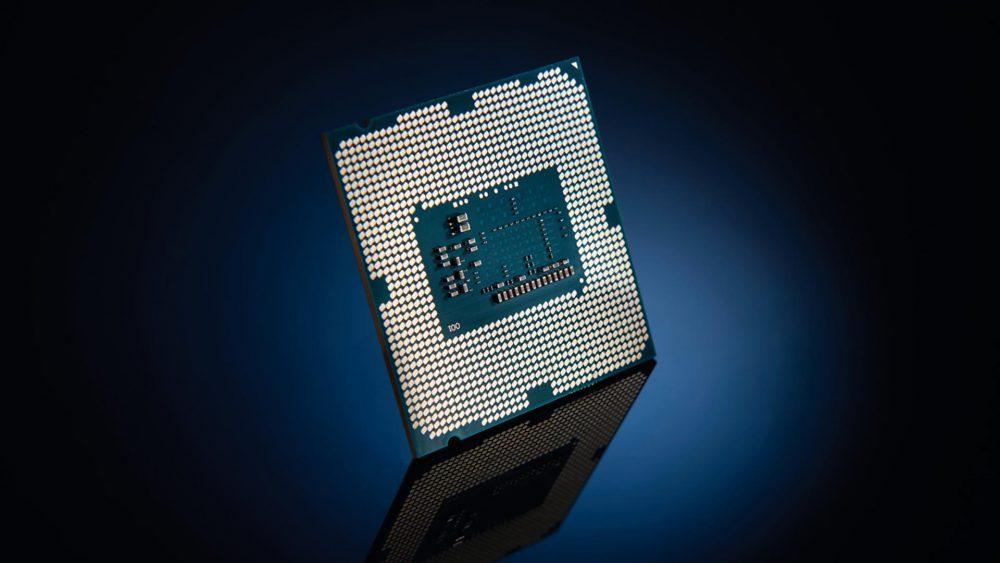

Mounting the cache on top of the processor instead of inside it

Another solution to the cache size “problem” is to place the last-level cache on top of the processor. The idea is to use TSV (through-silicon via) technology to stack an SRAM chip almost as large as the processor itself, which gives it a large storage capacity.

The drawback is that two stacked chips generate more heat in the same footprint, which limits how high the clock speed can go, so the final performance of the processor may suffer compared with a traditional configuration.