Scaling down chip manufacturing nodes is necessary for technology to advance, especially in terms of efficiency, but each shrink also makes manufacturing harder and introduces new problems. In this article we look at those problems, which are the main obstacles to faster progress in shrinking fabrication nodes.

João Geada, Head of Engineering at Ansys, recently gave an interview about the production problems that arise as lithography shrinks at each manufacturing node, and this article presents his point of view on them.

Moore’s law in manufacturing nodes

The first transistor was built in the late 1940s, and Intel's first ICs did not arrive until around 1970. Since then, the number of transistors on a chip has doubled roughly every 18 months to two years, as Moore's Law predicts, but the industry has now reached a point where mounting problems make it hard to keep up that pace.

According to Geada, the industry has been resorting to “tricks” for a long time to keep this law alive, and it still has many more up its sleeve. Most foundries already have some kind of plan to get to 3nm and even smaller fabrication nodes, although the numbers don't really mean much these days: they are only an approximation of the density that can be achieved.

Heterogeneous Manufacturing Nodes – Are They the Future?

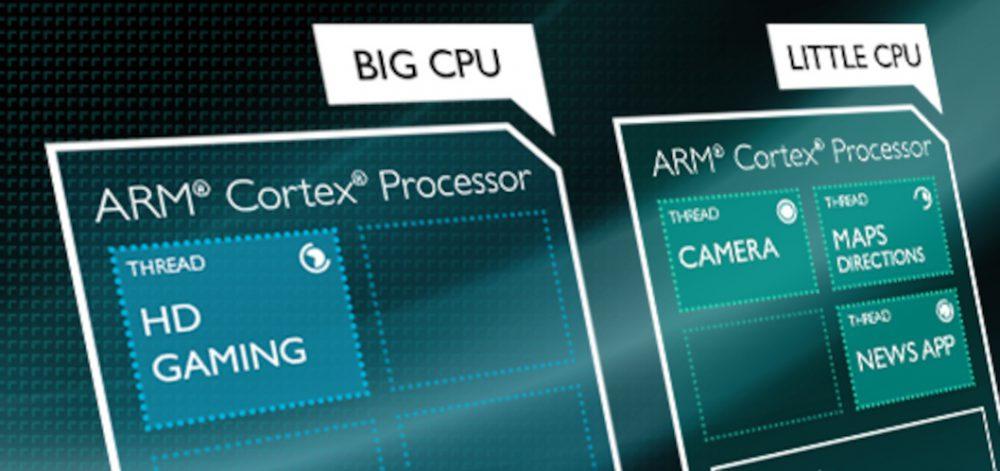

Lately all the major manufacturers seem to be steering their designs towards processors with a heterogeneous architecture (“big” and “small” cores). In general, heterogeneity is making a comeback in multiple dimensions as the industry moves to smaller geometries, because those smaller geometries make each wafer increasingly expensive to fabricate.

One of the things that drove Moore's Law was the falling cost per transistor, but today that cost is no longer falling; it seems to have stalled. Integrating more transistors on a chip therefore makes the chip much more expensive, which seems to be driving heterogeneity in chip manufacturing, since not every part of a design then has to move to a smaller node. In fact, sometimes it is not even necessary to work with silicon, as variants of gallium arsenide or silicon germanium give better results.

In other words, manufacturers are opting for heterogeneous processors because of manufacturing costs and because not everything needs a smaller lithography node, while also benefiting from the advantages this type of architecture offers.

On the other hand, according to Geada, there will be specialized silicon to meet specific needs, such as the millimeter-wave 5G that is now on everyone's lips. In his words, many of the needs of today's technology do not require the high cost of a tiny lithography node.
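The cost argument can be made concrete with a little arithmetic. The sketch below is only an illustration: the base transistor count, the base cost per transistor and the scaling factors are made-up numbers, not figures from Geada or any foundry. It compares a world where cost per transistor halves with each density doubling against one where it has stalled.

```python
# Illustrative only: all numbers below are hypothetical assumptions.
BASE_TRANSISTORS = 1_000_000_000      # assumed transistor count of a starting chip
BASE_COST_PER_TRANSISTOR = 1e-8       # assumed cost per transistor, arbitrary currency


def chip_cost(transistors: int, cost_per_transistor: float) -> float:
    """Cost of a chip modeled as transistor count times cost per transistor."""
    return transistors * cost_per_transistor


def cost_after_shrinks(shrinks: int, cost_scale_per_shrink: float) -> float:
    """Chip cost after `shrinks` density doublings.

    cost_scale_per_shrink = 0.5 models cost per transistor halving each time;
    cost_scale_per_shrink = 1.0 models a stalled cost per transistor.
    """
    transistors = BASE_TRANSISTORS * 2 ** shrinks
    cost_per_transistor = BASE_COST_PER_TRANSISTOR * cost_scale_per_shrink ** shrinks
    return chip_cost(transistors, cost_per_transistor)


for shrinks in range(4):
    falling = cost_after_shrinks(shrinks, 0.5)
    stalled = cost_after_shrinks(shrinks, 1.0)
    print(f"{shrinks} doublings: falling cost/transistor -> {falling:.2f}, "
          f"stalled -> {stalled:.2f}")
```

Under these assumptions the chip cost stays flat while cost per transistor keeps halving, and doubles with every density doubling once it stalls, which is the pressure towards putting only the parts that need it on the smallest node.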

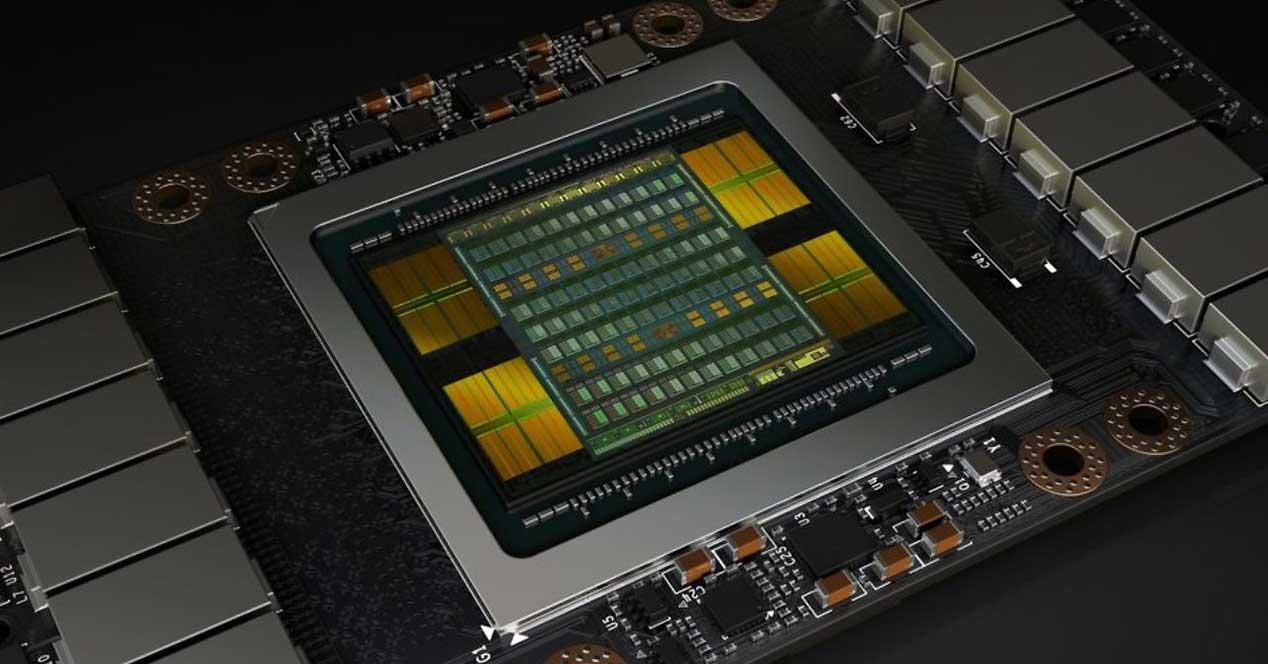

Multi-die and 3nm processors

Basically, each subsystem specializes in what it does well. According to Geada, 3nm is ideal for the compute and power density that modern processors require: a great deal of localized computing power can be built from the very large number of transistors this tiny node allows. But it is much more efficient, at least in terms of cost, to build a processor from multiple dies, each specialized in one thing.

For this reason, various manufacturers are devising systems that no longer have just one die but several, stacked for particular purposes. There is now a whole ecosystem around high-bandwidth memory, which manufacturers are adopting because they can no longer afford to keep memory far from the die, where its latency is higher.

Speed lost to the distance between components has always been a concern for engineers. Performance is, in many respects, limited by physical size, and that is becoming a headache given the power and performance requirements of today's systems. Whenever possible, therefore, all this infrastructure will migrate inwards, as close to the processor as possible. This is why much more complicated processors are starting to appear, in which everything is integrated into one package through some type of 3D-IC technique.
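To get a feel for why distance matters, here is a rough back-of-the-envelope sketch. It only models the time a signal needs to travel a given distance; the distances and the velocity factor (the fraction of the speed of light at which the signal is assumed to propagate) are hypothetical values chosen for illustration, and real memory latency includes much more than wire delay.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # speed of light in vacuum


def propagation_delay_ns(distance_m: float, velocity_factor: float = 0.5) -> float:
    """One-way signal travel time in nanoseconds over `distance_m` metres.

    velocity_factor is an assumed fraction of the speed of light; 0.5 is a
    rough illustrative value, not a measured property of any interconnect.
    """
    if not 0 < velocity_factor <= 1:
        raise ValueError("velocity_factor must be in (0, 1]")
    seconds = distance_m / (velocity_factor * SPEED_OF_LIGHT_M_PER_S)
    return seconds * 1e9


# Hypothetical distances: memory on the board versus memory stacked in-package.
for label, distance_m in [("off-package, 5 cm", 0.05), ("stacked, 1 mm", 0.001)]:
    delay = propagation_delay_ns(distance_m)
    print(f"{label}: {delay:.4f} ns one way")
```

At multi-gigahertz clock rates a cycle lasts a fraction of a nanosecond, so under these assumptions a few centimetres of travel already costs on the order of a clock cycle each way, while a millimetre-scale stacked connection costs far less.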

And what about chiplets?

Chiplets have been discussed for a while as a solution to all the current problems of shrinking manufacturing nodes. According to Geada, we are not at that point yet, because a lot remains to be developed before they are feasible in everyday products. In practice, simply placing more transistors in a smaller physical area has brought a number of unintended consequences.

One of them is the voltage drop caused by the exchange of information between two components. The more components there are, the more pronounced this drop becomes, so as more and more transistors are added and the supply voltage gets lower and lower, higher currents are needed to compensate. And since power supplies can deliver only a finite amount of current, this can become a problem in the future.

As soon as designs migrated to FinFET, the old way of reasoning about this stopped making technical sense, because the components are “choked” by a third factor: the effect of other parts of the system on local switching. The problem remains, but it is now more difficult to analyze.
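The relationship between lower voltage and higher current follows from basic circuit laws, and a small sketch makes it visible. It assumes a chip that draws a fixed power and a single lumped resistance in the power delivery path; the 100 W, 1 milliohm and voltage values are hypothetical, and a real power grid analysis is far more detailed than this.

```python
def supply_current_a(power_w: float, voltage_v: float) -> float:
    """Current needed to deliver `power_w` at `voltage_v` (from P = V * I)."""
    return power_w / voltage_v


def ir_drop_v(power_w: float, voltage_v: float, resistance_ohm: float) -> float:
    """Voltage lost across a lumped delivery resistance (Ohm's law, V = I * R)."""
    return supply_current_a(power_w, voltage_v) * resistance_ohm


def ir_drop_fraction(power_w: float, voltage_v: float, resistance_ohm: float) -> float:
    """IR drop expressed as a fraction of the supply voltage."""
    return ir_drop_v(power_w, voltage_v, resistance_ohm) / voltage_v


POWER_W = 100.0          # hypothetical chip power
RESISTANCE_OHM = 0.001   # hypothetical lumped delivery resistance (1 milliohm)

for voltage in (1.0, 0.8, 0.7):
    current = supply_current_a(POWER_W, voltage)
    fraction = ir_drop_fraction(POWER_W, voltage, RESISTANCE_OHM)
    print(f"{voltage:.1f} V supply: {current:.1f} A, "
          f"drop is {fraction:.1%} of the supply")
```

With power held constant, the drop as a fraction of the supply grows with the inverse square of the voltage, so in this simplified model each step down in supply voltage leaves noticeably less margin.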

Does integrating memory into the processor cause problems?

To reduce latency and improve efficiency, there has lately been a strong tendency to bring system memory as close as possible to the processor, to the point that there is talk of integrating the processor into the memory rather than the other way around. Since memory is quite sensitive to heat, this can become a problem in modern manufacturing nodes.

According to Geada, engineers have to simulate all the physics of a component at the same time when they design it, and they need techniques fast enough to analyze enough modes of operation and interactions to determine whether the system will actually perform as expected. There is definitely a thermal effect that must be analyzed, not only because of the heat generated but also because of the thermal stress that results from it.