The rendering method generally used in video games is rasterization, often loosely likened to the painter's algorithm. But with the arrival of real-time Ray Tracing we are approaching an era in which old limitations can be overcome. With the arrival of AMD's RDNA 2 and the new generation of consoles, it is clear that this is not a passing fad, which is why we explain the advantages of this new way of rendering a scene.

The ray tracing that we are beginning to see in games is not a full implementation of the ray tracing used today in movies, as that would be too slow. Instead, it combines the rasterization techniques used up to now with Ray Tracing, which is applied to certain problems that rasterization cannot solve.

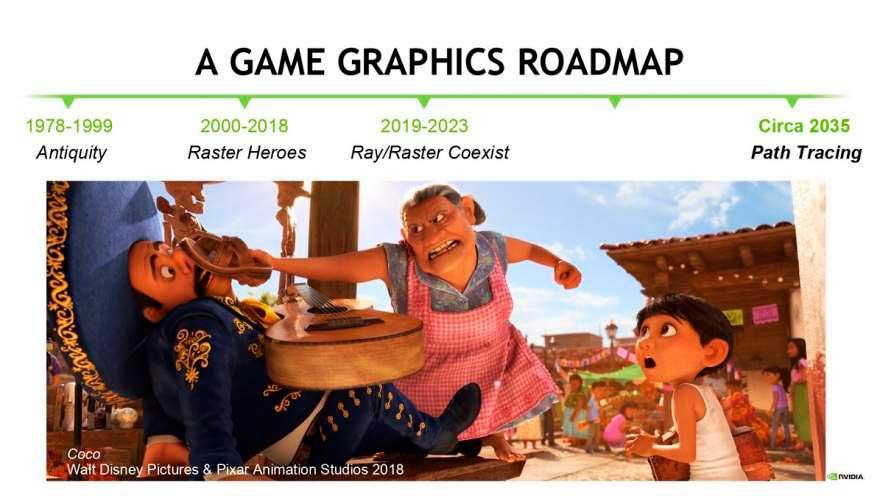

The idea is to gradually replace conventional rasterization techniques with Ray Tracing. Even NVIDIA, the company that has promoted this technology the most in recent years in terms of marketing, has set the date for the beginning of the end of the transition from rasterization to Ray Tracing at a still distant 2023.

That is why, during these years, games will make use of what we call Hybrid Ray Tracing or real-time Ray Tracing: the scene is rendered with the rasterization used until now, and Ray Tracing is added to solve certain visual problems that rasterization cannot.

This means that little by little games will leave rasterization behind, and the GPUs inside our graphics cards will gradually evolve toward that ideal.

Rasterization vs Ray Tracing

In rasterization, the color value of a pixel on the screen is written and may then be overwritten whenever another object is drawn over it; this means that a single pixel can be updated multiple times in a single frame.

With ray tracing, on the other hand, the color value of a pixel is not written until the ray has finished its entire trajectory, so the information in the image buffer is updated only once.

This difference, which may seem minor, is important: because rasterization continuously updates the pixel values in the image buffer, which is stored in the graphics card's memory, it requires really large bandwidths to render increasingly complex scenes.
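The difference in framebuffer writes can be illustrated with a minimal sketch. Everything here is hypothetical: the scene is a list of flat layers described as `(depth, color, pixels)` triples, and `rasterize` and `ray_trace` are toy stand-ins for the two approaches, not real GPU behavior. Real hardware reduces redundant writes with techniques such as early depth testing, so the rasterization count below is a worst case for objects drawn back to front.

```python
def rasterize(objects, width, height):
    """Draw objects in submission order with a depth test; count color writes."""
    color = [[None] * width for _ in range(height)]
    depth = [[float("inf")] * width for _ in range(height)]
    writes = 0
    for obj_depth, obj_color, pixels in objects:
        for x, y in pixels:
            if obj_depth < depth[y][x]:  # closer than what is stored: overwrite
                depth[y][x] = obj_depth
                color[y][x] = obj_color
                writes += 1
    return color, writes


def ray_trace(objects, width, height):
    """Per pixel, find the nearest object first, then write the color once."""
    color = [[None] * width for _ in range(height)]
    writes = 0
    for y in range(height):
        for x in range(width):
            hits = [(d, c) for d, c, pixels in objects if (x, y) in pixels]
            if hits:
                color[y][x] = min(hits, key=lambda h: h[0])[1]
                writes += 1
    return color, writes


# Three full-screen layers submitted back to front on a 4x4 image.
full_screen = {(x, y) for x in range(4) for y in range(4)}
scene = [(3.0, "far", full_screen), (2.0, "mid", full_screen), (1.0, "near", full_screen)]
raster_image, raster_writes = rasterize(scene, 4, 4)
traced_image, traced_writes = ray_trace(scene, 4, 4)
```

In this toy scene both functions produce the same image, but the rasterizer writes each pixel three times while the ray tracer writes it once.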

The hybrid ray tracing pipeline

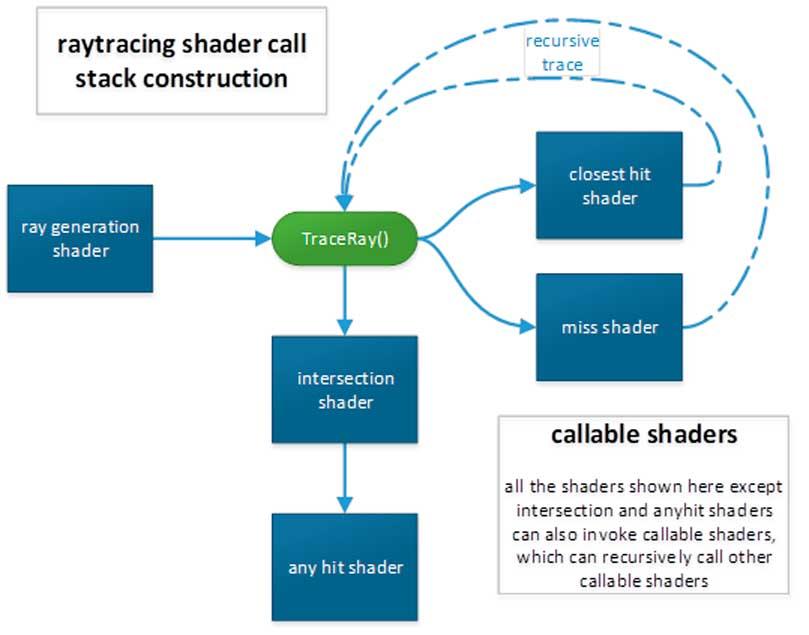

Regardless of whether we are using Vulkan, DirectX 12 Ultimate or any other API, the stages and their order are always the same.

- Ray Generation Shader: this shader has to be invoked every time we want a type of ray to be emitted into the scene, whether from the camera or from an object.

- Intersection Shader: the shader that calculates the intersection between the rays and the objects. For ordinary triangle geometry it has largely been superseded, because dedicated intersection units such as NVIDIA's RT Cores perform this work in parallel and more efficiently.

- Resolution shaders: the shader programs applied to the object depending on the result of the intersection; they are called miss shader, closest hit shader, any hit shader, etc. They do not all run at the same time, because each intersection test gives only one result.
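The order of the stages listed above can be sketched in a few lines of plain Python. This is only an illustration of the control flow, not the Vulkan or DirectX API: the function names, the dictionary-based spheres and the pinhole camera at the origin are all assumptions made for the example, and the intersection is done in software rather than by dedicated hardware.

```python
import math


def intersect_sphere(origin, direction, center, radius):
    """Intersection stage: distance along a normalized ray to the sphere, or None.

    Only the nearest hit in front of the origin is considered; a ray starting
    inside the sphere is treated as a miss in this sketch.
    """
    oc = [o - c for o, c in zip(origin, center)]
    b = sum(d * v for d, v in zip(direction, oc))
    c = sum(v * v for v in oc) - radius * radius
    disc = b * b - c
    if disc < 0:
        return None
    t = -b - math.sqrt(disc)
    return t if t > 0 else None


def closest_hit_shader(sphere, t):
    """Resolution stage when the ray hits something: return the surface color."""
    return sphere["color"]


def miss_shader():
    """Resolution stage when the ray hits nothing."""
    return "sky"


def trace(origin, direction, spheres):
    """Test every sphere, then run exactly one resolution shader."""
    best = None
    for sphere in spheres:
        t = intersect_sphere(origin, direction, sphere["center"], sphere["radius"])
        if t is not None and (best is None or t < best[0]):
            best = (t, sphere)
    if best is None:
        return miss_shader()
    return closest_hit_shader(best[1], best[0])


def ray_generation_shader(width, height, spheres):
    """Launch one primary ray per pixel from a camera at the origin looking down -z."""
    image = []
    for y in range(height):
        row = []
        for x in range(width):
            # Map the pixel centre to [-1, 1] on an image plane at z = -1.
            u = (x + 0.5) / width * 2 - 1
            v = 1 - (y + 0.5) / height * 2
            length = math.sqrt(u * u + v * v + 1)
            direction = (u / length, v / length, -1 / length)
            row.append(trace((0.0, 0.0, 0.0), direction, spheres))
        image.append(row)
    return image


spheres = [
    {"center": (0.0, 0.0, -6.0), "radius": 1.0, "color": "blue"},
    {"center": (0.0, 0.0, -3.0), "radius": 1.0, "color": "red"},
]
image = ray_generation_shader(3, 3, spheres)
```

Each ray ends in exactly one of the two resolution functions: the central pixel reaches the nearer red sphere through the closest hit path, while the corner rays pass both spheres and fall through to the miss path.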

Because the GPUs in our systems still lack the power for full Ray Tracing, it is used sparingly, limiting both the number of rays that make up the scene and their level of recursion.
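A rough back-of-the-envelope calculation shows why both limits matter. The sketch below assumes a deliberately pessimistic model that is not taken from any real engine: every ray hits a surface and spawns a fixed number of secondary rays (`secondary_per_hit`, a hypothetical parameter) until `max_depth` levels of recursion are reached.

```python
def rays_per_pixel(secondary_per_hit, max_depth):
    """Primary ray plus all secondary rays, assuming every ray hits something."""
    total = 0
    rays_at_level = 1  # one primary ray per pixel
    for _ in range(max_depth + 1):
        total += rays_at_level
        rays_at_level *= secondary_per_hit
    return total


def rays_per_frame(width, height, secondary_per_hit, max_depth):
    """Upper bound on the rays traced for one frame under the same assumption."""
    return width * height * rays_per_pixel(secondary_per_hit, max_depth)


shallow = rays_per_frame(1920, 1080, 2, 1)  # 3 rays per pixel
deep = rays_per_frame(1920, 1080, 2, 3)     # 15 rays per pixel
```

Under these assumptions, going from one level of recursion to three at 1920x1080 raises the bound from about 6.2 million to about 31.1 million rays per frame, which is why hybrid renderers keep the recursion shallow.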

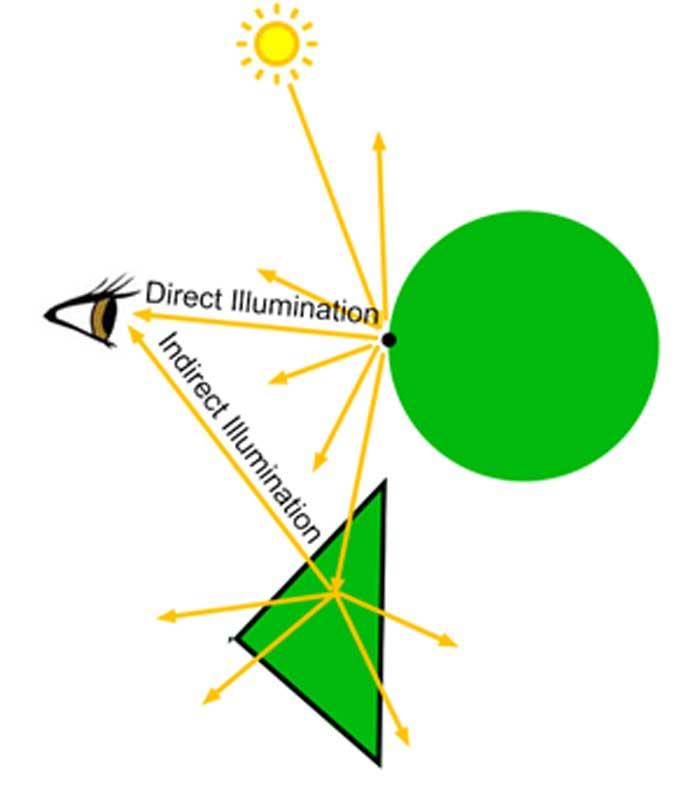

Indirect lighting, the great advantage of ray tracing

Despite continuous improvements in GPU power and programmability over the past two decades, all GPUs still use the same algorithm to render scenes: rasterization.

This algorithm is good enough if we want to render scenes with direct lighting only, that is, with light sources shining on the objects but without those objects in turn acting as indirect light sources. That is where rasterization begins to fall apart, as its lighting model is limited.

Ray tracing, on the other hand, follows the path of the light rays through the scene and takes their behavior and trajectory into account.

“We understand indirect lighting to be the light given off by an object when a direct light source falls on it.”

The problem is that rasterization does not take the path of the light into account, so when it comes to representing how light behaves when it hits objects, substitute methods have to be found to make up for the information missing from the scene.

Shadow maps, an example of the limitations of rasterization

More than once, the shadows in a video game will have made you want to gouge your eyes out. The reason is that, for lack of information, the GPU has to do extra work to figure out what the shadows will look like.

What is done is to render the scene again, but taking the light source that casts the shadow as the camera of this new pass and storing only the depth buffer, which becomes the shadow map.
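The two passes of a shadow map can be sketched in a simplified 2D setting. This is a toy model under stated assumptions, not how a GPU implements it: the light is a hypothetical directional light shining straight down from `LIGHT_HEIGHT`, occluders are horizontal segments given as `(x_min, x_max, y)`, and the shadow map is a one-dimensional list of depths.

```python
LIGHT_HEIGHT = 10.0  # hypothetical directional light shining straight down from y = 10


def build_shadow_map(occluders, resolution, world_width):
    """First pass: 'render' the scene from the light, keeping only depth."""
    shadow_map = [float("inf")] * resolution
    texel = world_width / resolution
    for x_min, x_max, y in occluders:  # horizontal segment at height y
        for i in range(resolution):
            centre = (i + 0.5) * texel
            if x_min <= centre <= x_max:
                shadow_map[i] = min(shadow_map[i], LIGHT_HEIGHT - y)
    return shadow_map


def in_shadow(shadow_map, world_width, x, y, bias=1e-3):
    """Second pass: compare a point's depth from the light with the stored depth."""
    i = min(int(x / world_width * len(shadow_map)), len(shadow_map) - 1)
    return (LIGHT_HEIGHT - y) > shadow_map[i] + bias


occluders = [(2.0, 4.0, 5.0)]  # one blocker floating above the ground
fine_map = build_shadow_map(occluders, 8, 8.0)
coarse_map = build_shadow_map(occluders, 2, 8.0)
```

With eight texels, a ground point at `x = 3.0` is correctly shadowed and one at `x = 6.0` is lit. With only two texels, a ground point at `x = 0.5` is also reported as shadowed even though nothing is above it, which is the kind of blocky artifact that low-resolution shadow maps produce.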

Can you imagine the power that would be required to calculate the shadows of all the objects in a scene this way? Keep in mind that with rasterization the value of the pixels is continuously updated, while with ray tracing this problem does not occur, since a pixel is only updated once the ray has completed its entire journey through the scene.

Shadows are among the things that ray tracing is starting to take over from shadow maps, and not only shadows but also reflections, refraction, etc.

All these effects are beginning to move to Ray Tracing because rasterization cannot solve these visual problems with the same fidelity.

Another way that developers work around the limitations of rasterization is by using static light maps, shadow maps and/or reflection maps. For example, they place pre-calculated shadows in the scene; these shadows are not dynamic and do not change when the lighting changes.

Another advantage: the representation of materials

Another important element when rendering a realistic 3D scene is the representation of materials, which also depends on how light behaves. Since rasterization has a very limited model of light, the objects in a 3D scene are not displayed as they should be.

It must be taken into account that each type of material is represented by a refraction quotient (more precisely, a reflectance coefficient): the fraction of incoming light that the surface sends back into the scene, ranging from 0 to 1. The highest value corresponds to a mirror-like surface that bounces rays back at full strength, and the lowest to an object that simply absorbs all the light. Light rays carry an energy level with them as they bounce off objects, and when this reaches zero the ray stops traveling through the scene.

Every time a ray hits an object, and we make the object emit an indirect ray as a result of the intersection through a ray generation shader, the new ray leaves with an energy level equal to that of the ray that hit the object multiplied by the object's refraction quotient.
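The energy rule described above amounts to a repeated multiplication, which a minimal sketch makes concrete. The function name and its `cutoff` and `max_bounces` parameters are assumptions added for the example: multiplying by a value between 0 and 1 never reaches exactly zero, so a practical tracer would probably stop a ray once its energy drops below some small threshold or after a fixed number of bounces.

```python
def bounce_energies(initial_energy, coefficients, cutoff=0.01, max_bounces=8):
    """Energy carried by the ray after each bounce off the listed surfaces.

    `coefficients` holds the 0-to-1 quotient of each surface the ray hits, in order.
    """
    energies = []
    energy = initial_energy
    for k in coefficients[:max_bounces]:
        energy *= k  # outgoing energy = incoming energy * surface quotient
        if energy < cutoff:
            break  # the ray is considered extinguished and stops traveling
        energies.append(energy)
    return energies


# A ray that hits two half-reflective surfaces and then a fully absorbing one.
path = bounce_energies(1.0, [0.5, 0.5, 0.0, 0.9])
# A ray trapped between perfect mirrors is stopped by the bounce limit instead.
mirrors = bounce_energies(1.0, [1.0] * 20)
```

In the first path the ray keeps half of its energy after each of the first two bounces and dies on the absorbing surface, so the fourth surface is never reached.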

Well, one of the advantages of Ray Tracing is precisely that: the way it can represent different materials, increasing their visual quality without having to resort to complex shader programs that demand a large amount of calculation from the GPU, or to combinations of texture maps that still do not achieve the same result.