In-memory computing is a paradigm that arises from placing RAM very close to the processor, to the point of putting it in the same package or even on the same chip. This paradigm is set to become very important in the future, since bringing memory physically closer to the processor will allow many processors to run faster.

What is in-memory computing?

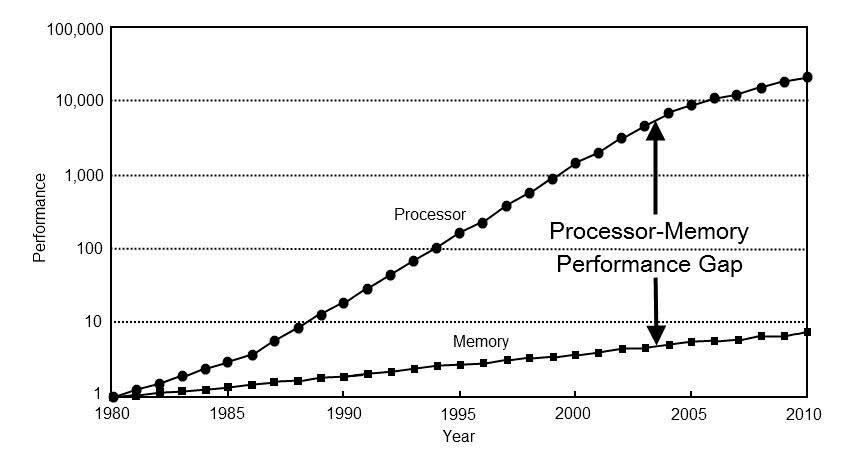

One of the universal problems for CPU performance is the distance between the processor and memory.

Over time, various mechanisms such as cache memory have been used to mitigate this distance. As the gap keeps growing, however, it becomes essential to shorten it by bringing memory closer to the processor. This is where the concept of in-memory computing comes in.

The idea is to bring memory close enough to the processor that instruction latency becomes shorter. Latency here means the number of clock cycles a CPU needs to complete an instruction.
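
As a rough illustration of this cycle-based definition, the following Python sketch converts a latency in clock cycles into nanoseconds. The function name and the cycle counts are hypothetical values chosen for illustration, not measurements of any real processor. The sketch assumes 200 cycles for conventional RAM and 40 for memory in the same package.

```python
def latency_ns(cycles: int, clock_ghz: float) -> float:
    """Convert a latency in clock cycles to nanoseconds.

    At clock_ghz GHz, one cycle lasts 1 / clock_ghz nanoseconds,
    so the total time is cycles / clock_ghz.
    """
    if clock_ghz <= 0:
        raise ValueError("clock_ghz must be positive")
    return cycles / clock_ghz


CLOCK_GHZ = 4.0
# Hypothetical latencies in cycles, for illustration only.
examples = {
    "conventional RAM": 200,
    "memory in the same package": 40,
}

for name, cycles in examples.items():
    print(f"{name}: {cycles} cycles = {latency_ns(cycles, CLOCK_GHZ):.1f} ns at {CLOCK_GHZ} GHz")
```

Under these assumed numbers, cutting the latency from 200 to 40 cycles turns a 50 ns wait into a 10 ns one at the same clock.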

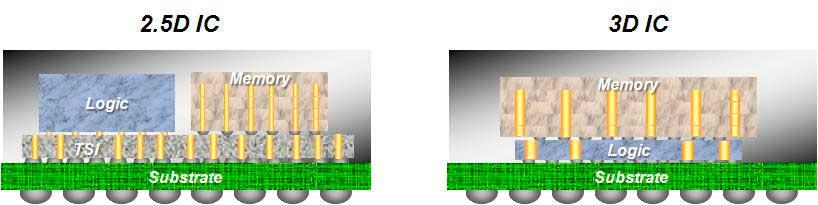

3D IC (three-dimensional integrated circuit) processors typically stack several dies of cores and/or memory. Their arrival will allow very high memory densities inside the processor package. Memory inside the processor, or very close to it, can then be used as RAM or as part of RAM, but at much higher speed.

The other motivation is energy. Instructions that operate on data in conventional RAM consume an order of magnitude more energy than when the data is already in the cache, and sometimes up to two orders of magnitude more. A processor has a limited energy budget, so instructions must consume as little as possible for the design to achieve good performance per watt.
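
To see why this energy gap matters, the sketch below computes the expected energy per memory access. It assumes a fraction of accesses hits the cache and the rest go to RAM. The energies are in arbitrary units and simply encode the 10× and 100× ratios mentioned above. The function name and the hit rates are hypothetical.

```python
def average_access_energy(hit_rate: float, cache_energy: float, ram_energy: float) -> float:
    """Expected energy per access: hits cost cache_energy, misses cost ram_energy."""
    if not 0.0 <= hit_rate <= 1.0:
        raise ValueError("hit_rate must be between 0 and 1")
    return hit_rate * cache_energy + (1.0 - hit_rate) * ram_energy


CACHE_ENERGY = 1.0  # arbitrary unit

# RAM accesses assumed to cost one (10x) or two (100x) orders of magnitude more.
for ram_factor in (10.0, 100.0):
    for hit_rate in (1.0, 0.9, 0.5):
        energy = average_access_energy(hit_rate, CACHE_ENERGY, CACHE_ENERGY * ram_factor)
        print(f"RAM cost {ram_factor:.0f}x, hit rate {hit_rate:.0%}: {energy:.2f} units per access")
```

With a 100× ratio, even a 90% hit rate leaves an average cost of about 10.9 units, almost eleven times the all-cache case. This is why keeping data close to the processor pays off in energy.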

In the current paradigm, a processor's clock speed is limited by the energy consumption of its most power-hungry instructions. The idea for the future is to exploit memory's proximity to the processor in two ways. First, certain instructions would consume less power than they do now. Second, the clock speed could vary with the type of instruction. For example, instructions that consume less power could run at higher clock speeds than others.

In other words, future CPUs will not run at a fixed clock speed. Instead, the clock will fluctuate dynamically according to each instruction, or set of instructions, being executed at any given time.
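
The following sketch models this idea under strong simplifying assumptions. It assumes one instruction per cycle, so power equals energy per instruction times clock frequency, and a hard clock ceiling set by the circuits. The instruction classes, energies, power budget and ceiling are all hypothetical. The sketch compares today's single clock, set by the most expensive instruction, with a clock chosen per instruction class.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InstructionClass:
    name: str
    energy_nj: float  # hypothetical energy per instruction, in nanojoules


def max_clock_ghz(power_budget_w: float, energy_nj: float, hw_limit_ghz: float) -> float:
    """Highest clock that keeps power within budget, assuming one instruction per cycle.

    Power (W) = energy per instruction (J) * frequency (Hz). With energy in nJ and
    frequency in GHz the 1e-9 and 1e9 factors cancel, so P [W] = E [nJ] * f [GHz].
    """
    return min(power_budget_w / energy_nj, hw_limit_ghz)


POWER_BUDGET_W = 10.0  # assumed power budget
HW_LIMIT_GHZ = 5.5     # assumed maximum clock the circuits can reach

classes = [
    InstructionClass("alu", 2.0),
    InstructionClass("near_memory_load", 2.5),
    InstructionClass("far_memory_load", 5.0),
]

# Current approach: one fixed clock, set by the most expensive instruction.
fixed = min(max_clock_ghz(POWER_BUDGET_W, c.energy_nj, HW_LIMIT_GHZ) for c in classes)
print(f"fixed clock: {fixed:.2f} GHz")

# Envisioned approach: a clock chosen per instruction class.
for c in classes:
    print(f"{c.name}: {max_clock_ghz(POWER_BUDGET_W, c.energy_nj, HW_LIMIT_GHZ):.2f} GHz")
```

With these assumed numbers, a fixed clock is held to 2 GHz by the far-memory load, while lighter instructions could run at 4 to 5 GHz. Real processors also raise voltage along with frequency, so power grows faster than linearly with the clock. This sketch deliberately ignores that effect.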

Bandwidth improvements from integrating memory into the processor

At the physical level, the memory interface to RAM runs along the edge of the chip. Getting more bandwidth means widening the interface, which makes the memory controller larger. Because the memory controller sits on the periphery of the processor, a larger controller means a bigger chip and higher cost. The other option is to raise the clock speed, but this sharply increases the power consumed by the link between the RAM and the processor.

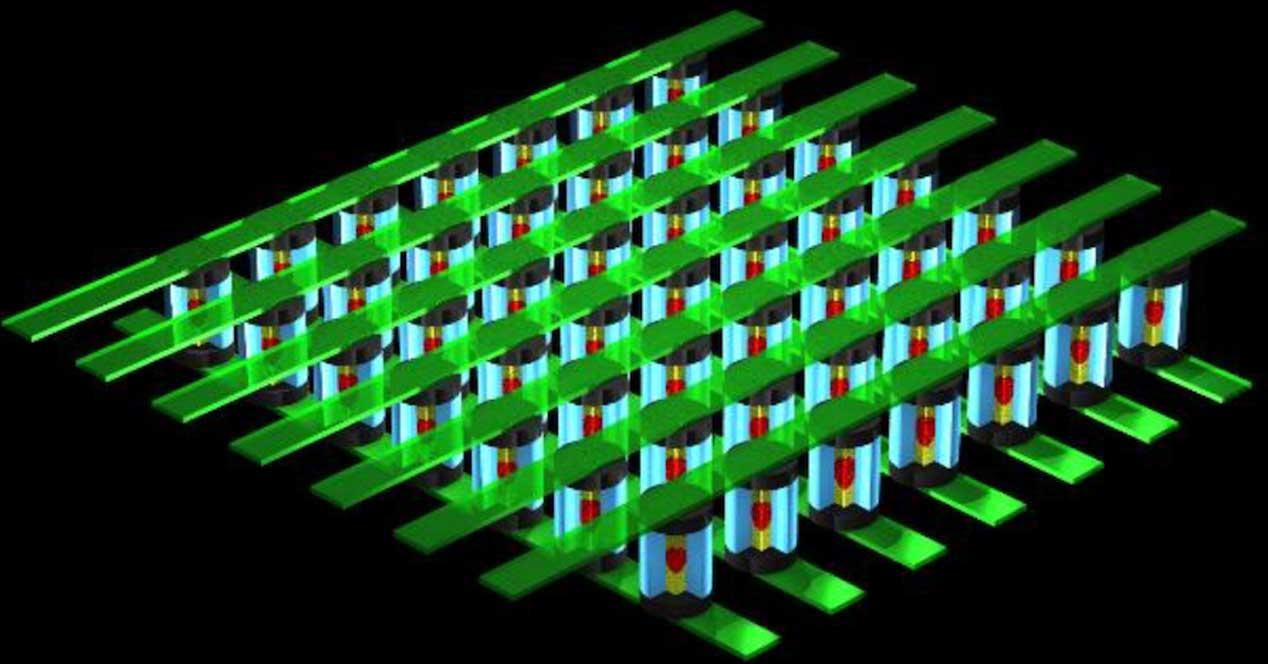

Integrating RAM in the same package or on the same chip allows much wider memory interfaces, usually laid out as a matrix (grid) of connections. For example, a 1024-bit bus would take up a great deal of space along the perimeter. Instead, the memory can be stacked vertically on the processor, or both can be placed side by side on a common substrate.

This allows a 1024-bit interface, for example, to be arranged as a 32 × 32 grid that occupies far less area. A large number of connections makes much higher bandwidth possible without resorting to very high clock speeds.
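
A simple geometric model shows why a grid saves space. The sketch assumes, hypothetically, that every contact occupies the same pitch whether it sits on the edge or in the grid. It computes the side of the square footprint needed to hold 1024 connections, either along the four edges or as an area array. The 50 µm pitch is an assumed round number.

```python
import math


def side_for_perimeter(pins: int, pitch_um: float) -> float:
    """Side length (um) of a square whose four edges hold `pins` contacts."""
    per_edge = math.ceil(pins / 4)
    return per_edge * pitch_um


def side_for_grid(pins: int, pitch_um: float) -> float:
    """Side length (um) of a square area array holding `pins` contacts."""
    per_row = math.isqrt(pins)
    if per_row * per_row < pins:
        per_row += 1  # round up to the next full row
    return per_row * pitch_um


PINS = 1024
PITCH_UM = 50.0  # assumed contact pitch, for illustration only

print(f"perimeter: {side_for_perimeter(PINS, PITCH_UM) / 1000:.1f} mm per side")
print(f"32 x 32 grid: {side_for_grid(PINS, PITCH_UM) / 1000:.1f} mm per side")
```

Under this model the edge layout needs a side eight times longer: 256 contacts per edge versus 32 per row. This is the area saving described above. Real packages use multiple rows of edge pads and different pitches, so the exact ratio will differ.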

Keep in mind that a 64-bit bus at 2 GHz and a 128-bit bus at 1 GHz deliver the same raw bandwidth. Even so, the former does not consume the same power as the latter; it consumes twice as much. This is why increasing the number of interconnections with the memory is so important.
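
The bandwidth side of this comparison is simple arithmetic: peak bandwidth equals bus width times transfers per second. The sketch below assumes one transfer per clock cycle. DDR memories actually transfer twice per clock, which the optional `transfers_per_clock` parameter allows for. The function name is hypothetical. The sketch does not model power, so it neither confirms nor refutes the factor of two stated above.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, clock_ghz: float, transfers_per_clock: int = 1) -> float:
    """Peak bandwidth in gigabytes per second.

    bits per second = width * clock * transfers per clock; divide by 8 for bytes.
    With the clock in GHz, the result comes out directly in GB/s.
    """
    return bus_width_bits * clock_ghz * transfers_per_clock / 8


narrow_fast = peak_bandwidth_gb_s(64, 2.0)   # 64-bit bus at 2 GHz
wide_slow = peak_bandwidth_gb_s(128, 1.0)    # 128-bit bus at 1 GHz
very_wide = peak_bandwidth_gb_s(1024, 1.0)   # 1024-bit bus at 1 GHz

print(f"64-bit @ 2 GHz:   {narrow_fast:.1f} GB/s")
print(f"128-bit @ 1 GHz:  {wide_slow:.1f} GB/s")
print(f"1024-bit @ 1 GHz: {very_wide:.1f} GB/s")
```

The first two lines print the same 16.0 GB/s, and the wider bus gets there at half the clock. The 1024-bit bus reaches 128.0 GB/s without raising the clock at all.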

The goal of computational RAM is to offer far more bandwidth than conventional memory, almost on par with a cache. That way, both bandwidth-bound and latency-bound instructions benefit equally from this paradigm.
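
A simple latency-plus-bandwidth model shows why both kinds of instructions benefit. In this model, fetching a block of data takes a fixed access latency plus the block's size divided by the bandwidth. The latencies and bandwidths for "conventional" and "near" memory are hypothetical round numbers, not figures for any real product.

```python
def transfer_time_ns(size_bytes: int, latency_ns: float, bandwidth_gb_s: float) -> float:
    """Fixed access latency plus streaming time.

    1 GB/s moves one byte per nanosecond, so size / bandwidth is already in ns.
    """
    return latency_ns + size_bytes / bandwidth_gb_s


# Hypothetical memories: (latency in ns, bandwidth in GB/s).
MEMORIES = {
    "conventional": (80.0, 50.0),
    "near": (20.0, 500.0),
}

for size in (64, 1024 * 1024):  # one 64-byte line vs. a 1 MiB block
    for name, (lat, bw) in MEMORIES.items():
        t = transfer_time_ns(size, lat, bw)
        print(f"{size:>8} B from {name:<12}: {t:10.1f} ns")
```

For the 64-byte access the total is dominated by latency, so the gain comes from the shorter wait. For the 1 MiB block the total is dominated by size divided by bandwidth, so the gain comes from the wider interface.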

The drawback of computational memory: temperature

The problem with combining a processor and memory into what we call in-memory computing is that together they generate a large amount of heat. So even though the number of cycles per instruction goes down, the clock speed may have to be limited. Otherwise, temperatures could rise high enough to fry the processor.
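
The trade-off can be illustrated with a deliberately simplified, lumped steady-state thermal model. In it, temperature equals ambient plus thermal resistance times total power, and logic power is assumed proportional to the clock. Every number below is hypothetical: the temperatures, the 0.5 °C/W thermal resistance, 25 W per GHz of logic, and 30 W of memory. The model ignores hotspots and any extra thermal resistance that stacking itself may add.

```python
def max_clock_ghz_thermal(
    t_max_c: float,
    t_ambient_c: float,
    r_th_c_per_w: float,
    logic_w_per_ghz: float,
    memory_w: float = 0.0,
) -> float:
    """Highest clock (GHz) whose steady-state temperature stays at or below t_max_c.

    Lumped model: T = t_ambient_c + r_th_c_per_w * (memory_w + logic_w_per_ghz * f).
    """
    power_budget_w = (t_max_c - t_ambient_c) / r_th_c_per_w
    logic_budget_w = max(power_budget_w - memory_w, 0.0)  # heat left for the logic
    return logic_budget_w / logic_w_per_ghz


# Hypothetical package: 40 °C ambient, 100 °C limit, 0.5 °C/W, 25 W per GHz of logic.
alone = max_clock_ghz_thermal(100.0, 40.0, 0.5, 25.0)
with_memory = max_clock_ghz_thermal(100.0, 40.0, 0.5, 25.0, memory_w=30.0)

print(f"processor alone:             {alone:.1f} GHz")
print(f"with 30 W of memory in package: {with_memory:.1f} GHz")
```

Under these assumptions, sharing the package's heat budget with 30 W of memory lowers the sustainable clock from 4.8 GHz to 3.6 GHz. This happens even though each instruction may now need fewer cycles.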

This is also why it is an extremely expensive solution that we may not see in the consumer market. A processor with integrated memory that can sustain high clock speeds stably is rare. Such processors may end up so expensive that they are commercially unfeasible.

In fact, this is why stacking memory on the same chip is not being discussed for high-performance processors in the medium term. Instead, the discussion is about adding processors to memory, which is not the same as adding memory to processors. This concept is called computational RAM, in-memory computing, or in-memory processor.