AMD recently introduced the first consumer CPU with vertical cache, which uses 3D IC technology to stack an SRAM die on top of a Zen 3 CCD. What can vertical cache contribute to the performance of CPUs, GPUs and other processors and PC components? That is what we will try to explain in this article.

The addition of the so-called “V-Cache” to some of AMD's upcoming Zen 3-based CPUs raises the following question: does it make sense to expand a CPU's cache to stratospheric levels in order to gain performance? Obviously, stacking additional cache vertically is not something only AMD can do: vertical chip-to-chip interconnects make it possible to place an SRAM die right on top of an APU, a CPU or a GPU without major obstacles.

So, at its core, what AMD has done is nothing extraordinary, although it is true that they have been pioneers in using 3D-stacked SRAM to expand the total cache of their CCD chiplets. Note that vertical SRAM used as cache will always be the last-level cache (LLC) of a processor, so its implementation will vary depending on the type of processor we are talking about.

Vertical cache only at the last level

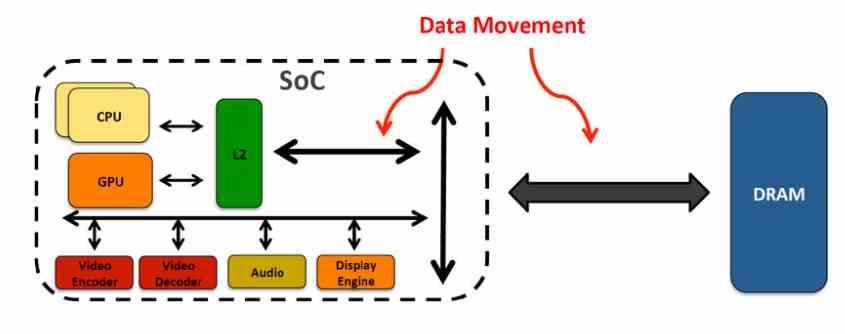

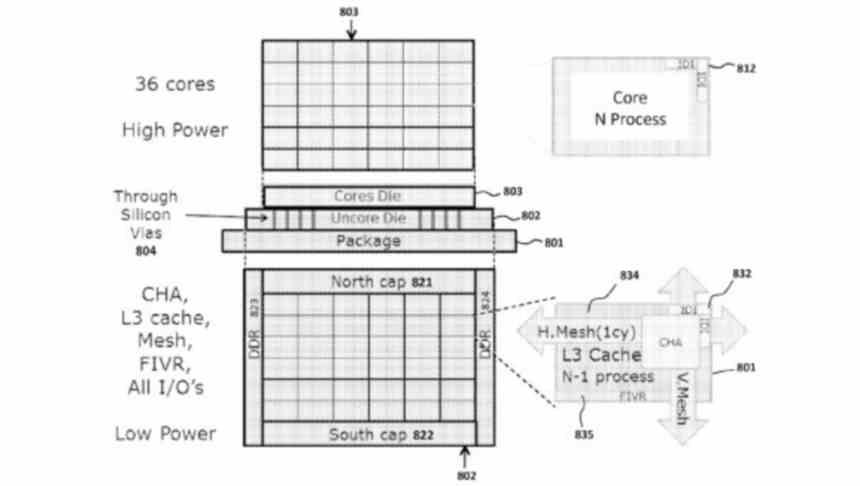

The reality is that today, in general terms, all processors are SoCs, in the sense that they contain several processing elements, which can be homogeneous (all using the same type of core) or heterogeneous (mixing different types of cores). What they do have in common is the northbridge, which Intel calls “Uncore” and AMD calls “Data Fabric.” Whatever the name, we are really talking about the same concept.

What does this have to do with vertical cache? Because of where it sits in an SoC, the vertical cache can only occupy the last position in the cache hierarchy, next to the memory controller, never ahead of it. In Zen 3 CCD chiplets there is no memory controller, since it is located in the IOD, which is a separate chip. In a hypothetical APU, however, the vertical cache would sit on the path to the memory controller: just before RAM in the hierarchy, but after the last cache level of the CPU or GPU.

Vertical cache instead of vertical memory

Under this premise, the ideal would be for RAM to sit as close to the processor as possible. How about putting all of the RAM on top of the processor using 3D stacking? It would not fit, given the tens of gigabytes of capacity that current systems require. But what about stacking more and more layers of memory on top of the processor? After all, this can already be done today.

It sounds like a great idea, and it is technically possible, but it stops being one once we consider that each new memory layer reduces the number of complete, fully working processor-and-memory stacks that come out of manufacturing. Then there is heat: each new layer forces lower clock speeds for both the memory and the processor. Suddenly, what sounded like a great idea no longer is: we end up with a processor that is very expensive to manufacture, available in few units, and slower than the same components kept separate.
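The yield argument is multiplicative: a stack only works if the base die and every stacked layer and bond in it work. The Python sketch below illustrates this with a simple independent-defect model. The function name `stack_yield` and all yield figures are hypothetical assumptions, not AMD or foundry data, and the model ignores the thermal effect described above.

```python
# Hypothetical yield model: every number here is an illustrative assumption,
# not real foundry or AMD data.


def stack_yield(base_die_yield: float, layer_die_yield: float,
                bond_yield: float, layers: int) -> float:
    """Fraction of fully working stacks, assuming each stacked memory layer
    and each bonding step succeeds or fails independently."""
    result = base_die_yield
    for _ in range(layers):
        # Every extra layer multiplies in its own die yield and bond yield.
        result *= layer_die_yield * bond_yield
    return result


if __name__ == "__main__":
    for n in range(5):
        y = stack_yield(base_die_yield=0.90, layer_die_yield=0.95,
                        bond_yield=0.98, layers=n)
        print(f"{n} memory layer(s): {y:.1%} of stacks fully working")
```

With these assumed values, the share of fully working stacks falls from 90% with no stacked memory to roughly 68% with four layers.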

The best option is therefore a single layer on top of the processor: it does not have enough capacity to serve as RAM today, but it does work as a last-level cache.

Vertical cache performance on CPUs

The sole purpose of any processor's cache is to reduce the CPU's access time to RAM. In recent years, however, moving data in the required quantities has come with a huge energy cost, so architects have had to look for ways to reduce the number of times the CPU or GPU accesses its memory. This is why a large last-level cache matters.

The vertical cache is therefore not intended to increase a CPU's raw processing capacity, but its effective performance. In any system we never achieve 100% of the theoretical performance; there are always losses, and in a processor an important part of those losses comes from communication with the memory hierarchy, caches included.

Since the vertical cache is the last level, it acts as the backstop for the data the previous cache levels need, and thanks to its large size it reduces how often the processor has to access RAM. Its efficiency will never be 100%, though: even with a very large cache, a program may request a memory address that has not been copied into the last-level cache, forcing the processor to go to its RAM.
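To see why a larger last-level cache reduces RAM accesses without ever eliminating them, the sketch below simulates a fully associative LRU cache on a synthetic access trace. The workload mix (a 4,096-line hot set plus a large cold region), the capacities and the helper names `lru_hit_rate` and `synthetic_trace` are hypothetical; real last-level caches are set-associative and use their own replacement policies. The first access to any line is always a miss, so the hit rate stays below 100% however large the cache is.

```python
import random
from collections import OrderedDict
from typing import List, Sequence


def lru_hit_rate(trace: Sequence[int], capacity_lines: int) -> float:
    """Fully associative LRU cache model; returns hits / accesses.
    The trace must be non-empty."""
    cache: "OrderedDict[int, None]" = OrderedDict()  # least -> most recent
    hits = 0
    for addr in trace:
        if addr in cache:
            hits += 1
            cache.move_to_end(addr)  # mark as most recently used
        else:
            # Miss: in a real system this line would be fetched from RAM.
            cache[addr] = None
            if len(cache) > capacity_lines:
                cache.popitem(last=False)  # evict the least recently used line
    return hits / len(trace)


def synthetic_trace(n_accesses: int, seed: int = 0) -> List[int]:
    """Hypothetical workload: 80% of accesses go to a small hot set,
    20% touch a much larger cold region."""
    rng = random.Random(seed)
    trace = []
    for _ in range(n_accesses):
        if rng.random() < 0.8:
            trace.append(rng.randrange(4096))              # hot working set
        else:
            trace.append(4096 + rng.randrange(1_000_000))  # cold data
    return trace


if __name__ == "__main__":
    trace = synthetic_trace(200_000)
    for lines in (1024, 4096, 16384, 65536):
        rate = lru_hit_rate(trace, lines)
        print(f"{lines:>6} lines: hit rate {rate:.1%}, RAM accesses {1 - rate:.1%}")
```

LRU is a convenient model here because it has the inclusion (stack) property: on the same trace, a larger fully associative LRU cache never gets fewer hits than a smaller one.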

How is the performance of a cache measured?

When the CPU searches for a piece of data or an instruction in the system's cache hierarchy, it starts with the levels closest to the CPU itself. If it finds the data, we call it a “hit”: the CPU aimed at the cache and hit the target. If it cannot find the data at that cache level, it is a “miss”, and the next levels down have to be searched. While that happens the CPU may stall, since the CPU or GPU has no data to process.
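A common way to turn hit and miss rates into a single figure is the average memory access time (AMAT): hit time plus miss rate times miss penalty, applied level by level. The sketch below uses made-up latencies and miss rates, including the assumption that a larger, stacked L3 adds a few cycles of latency while lowering its miss rate; none of these figures are measurements of a real CPU.

```python
from typing import List, Tuple


def amat(levels: List[Tuple[float, float]], memory_latency: float) -> float:
    """Average memory access time of a multi-level cache hierarchy.

    levels: (hit_latency_cycles, local_miss_rate) pairs, ordered from the
    level closest to the core outward. Applies
    AMAT = hit_time + miss_rate * miss_penalty recursively, where the miss
    penalty of each level is the AMAT of everything below it.
    """
    penalty = memory_latency
    # Work from the last cache level back toward the core.
    for hit_latency, miss_rate in reversed(levels):
        penalty = hit_latency + miss_rate * penalty
    return penalty


if __name__ == "__main__":
    # Hypothetical numbers: L1, L2 and L3 as (latency in cycles, miss rate).
    baseline = [(4, 0.10), (12, 0.40), (46, 0.50)]
    # Hypothetical larger stacked L3: slightly slower but misses less often.
    stacked = [(4, 0.10), (12, 0.40), (50, 0.30)]
    print(f"baseline AMAT: {amat(baseline, 300):.2f} cycles")
    print(f"stacked-L3 AMAT: {amat(stacked, 300):.2f} cycles")
```

With these assumed values the average access time drops from about 13.0 cycles to 10.8 cycles, even though the enlarged L3 itself is slower to hit.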

In the case of the vertical cache, since it is not one of the first cache levels, its presence cannot spare the processor from going through the earlier cache levels first. As we have already said, the first cache levels cannot be turned into vertical cache, especially now that processors have been multi-core for some time and the additional cache levels are used to connect groups of cores with each other, in some cases even cores of different types.
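The point that a vertical cache cannot skip the earlier levels can be sketched as a serial lookup: a request only reaches the stacked cache after missing in L1 and L2, paying their latency on the way. This is a simplified Python model with hypothetical level names and latencies; real CPUs overlap parts of these lookups, and the `lookup` helper is illustrative, not a description of any specific processor.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical per-level lookup latencies in cycles (illustrative only).
HIERARCHY: List[Tuple[str, int]] = [
    ("L1", 4),
    ("L2", 12),
    ("L3 + vertical cache", 50),
]
RAM_LATENCY = 300  # hypothetical extra cycles to reach RAM


def lookup(addr: int, contents: Dict[str, Set[int]]) -> Tuple[str, int]:
    """Search the hierarchy in order, starting with the level closest to the core.

    Returns the level that served the request and the cycles spent.
    Every level that misses still adds its lookup latency, so a large
    last-level cache cannot avoid the cost of the earlier levels.
    """
    cycles = 0
    for name, latency in HIERARCHY:
        cycles += latency
        if addr in contents.get(name, set()):
            return name, cycles  # hit at this level
    return "RAM", cycles + RAM_LATENCY  # missed every cache level


if __name__ == "__main__":
    contents = {
        "L1": {0x10},
        "L2": {0x10, 0x20},
        "L3 + vertical cache": {0x10, 0x20, 0x30},
    }
    for addr in (0x10, 0x20, 0x30, 0x40):
        level, cycles = lookup(addr, contents)
        print(f"address {addr:#x}: served by {level} after {cycles} cycles")
```

In this model, a request served by the stacked cache still pays for the L1 and L2 lookups (66 cycles in total), and a request that misses everywhere pays for all three levels before reaching RAM.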

Performance on GPUs and other types of processors

In GPUs we have seen AMD's Infinity Cache, a large cache that could be moved to a die stacked on top of the GPU, separate from the main die. For now it is not delivering considerable performance gains, but a GPU with vertical cache could have far more capacity than the 128 MB of the Navi 21 GPU: combined with a more advanced manufacturing node and freed from the area limits of the main die, the last-level cache could reach 512 MB or even 1 GB.

What would be special about a GPU with a 1 GB cache? In ray tracing, tests have shown that keeping certain data close to the GPU greatly increases performance when rendering ray-traced scenes. So future GPUs from NVIDIA, Intel and AMD may well implement vertical cache to boost performance in ray-traced scenes, which will become increasingly common.

Another important market is flash memory controllers, which today use DDR4 or LPDDR4 memory chips as a cache. Adding vertical cache to these controllers could be enough to eliminate the DRAM chip and make NVMe SSDs cheaper, since the cache would no longer require DRAM.