Since the arrival of DirectX 8, the number of shader types has kept growing over time. The next to join the list are Traversal Shaders, which are tied to the slow but steady adoption of ray tracing in games. Let's see what they consist of and what performance improvements they will bring to games.

Traversal Shaders are one of the two approaches to the problem of traversing the spatial data structure in ray tracing: using shader programs to walk the data structure that represents the scene, instead of using specialized or dedicated hardware.

First of all, let's recall what a shader is

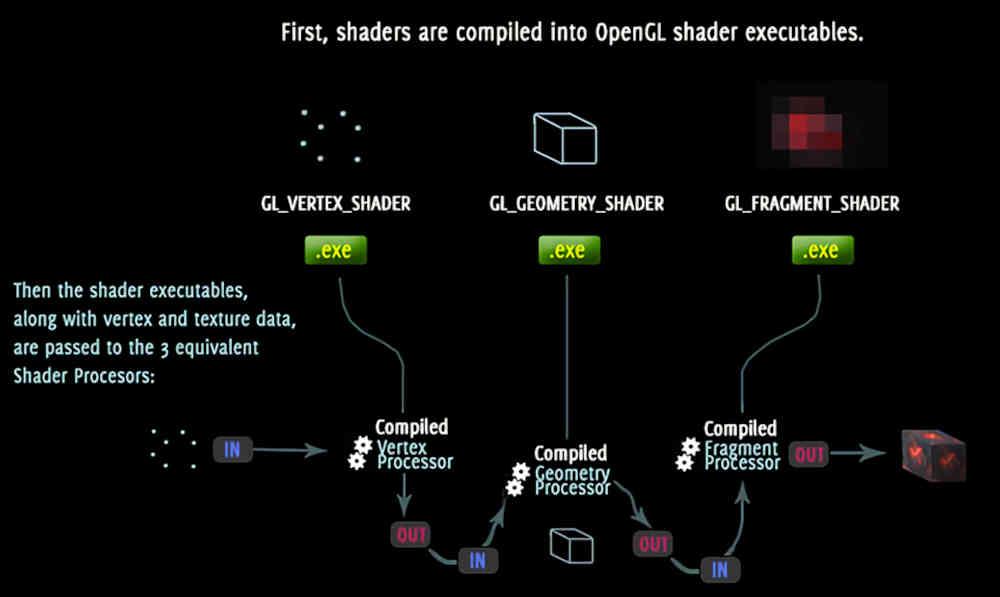

In software, a shader is, colloquially, a program that runs on the cores of the GPU, which have different names depending on the manufacturer: AMD calls these hardware blocks Compute Units in its Radeon GPUs, NVIDIA calls them Streaming Multiprocessors (SM) in its GeForce GPUs, and Intel calls them Xe-cores in its Arc GPUs.

The shader itself is the software that runs on one of those units, which work much like a CPU. The difference is that a shader is a program that operates on the graphics primitives of the different stages of the rendering pipeline: vertices, primitives, triangles, fragments or pixels. These categories are nothing more than abstractions we make, though; for the shader unit everything is data, which means it can execute all kinds of programs.

So why not use a CPU? Because GPUs, being designed to operate in parallel, solve certain kinds of problems much better than a CPU does, while for other problems the opposite is true.

Microsoft, DirectX Ray Tracing and its shaders

When Redmond first spoke about implementing ray tracing, at the Game Developers Conference in 2018, there were still a few months to go until the launch of the NVIDIA RTX 20 series. At that time, the existence of units to accelerate ray tracing, such as NVIDIA's RT Cores and AMD's Ray Accelerators, was completely unknown, at least publicly.

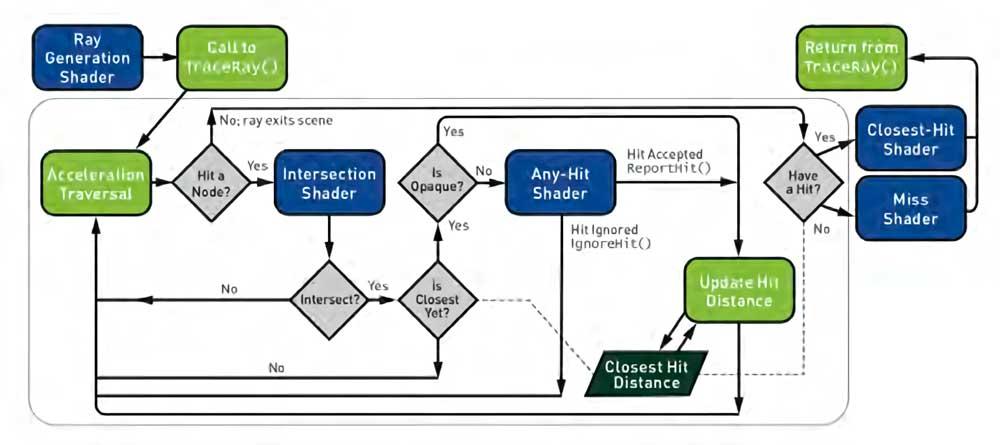

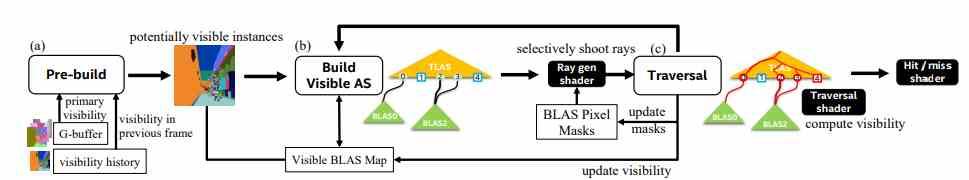

What was Microsoft's proposal to extend its multimedia API? Adding a series of new stages, which are defined in this diagram:

Understanding the diagram is easy:

- The blocks in blue are shader programs that run at the GPU level.

- The blocks in green are handled by the driver on the CPU, in tandem with the GPU.

- The gray diamonds are conditions that can occur as a ray travels through the scene.
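To make the flow of the diagram a little more tangible, here is a toy Python model of what happens when a single ray is traced. It is only a sketch under simplifying assumptions: `trace_ray`, `Hit` and the parameter names are hypothetical (in DXR the process is started from HLSL with `TraceRay()`), a flat list of candidate objects stands in for the acceleration structure, and every object goes through the intersection callback, whereas in DXR intersection shaders are only needed for custom, non-triangle geometry. Notice that the loop itself, the traversal, is not something the application writes.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, TypeVar

R = TypeVar("R")

@dataclass
class Hit:
    t: float  # distance along the ray
    obj: str  # object that was hit

def trace_ray(
    candidates: List[str],
    intersection: Callable[[str], Optional[float]],
    any_hit: Callable[[Hit], bool],
    closest_hit: Callable[[Hit], R],
    miss: Callable[[], R],
) -> R:
    """Toy model of tracing one ray: the fixed part loops over the candidates
    (standing in for acceleration-structure traversal) and calls the
    application-supplied shaders at each decision point."""
    closest: Optional[Hit] = None
    for obj in candidates:
        t = intersection(obj)  # intersection shader: where does the ray hit it?
        if t is None:
            continue
        hit = Hit(t, obj)
        if not any_hit(hit):  # any-hit shader can reject a hit (e.g. alpha testing)
            continue
        if closest is None or hit.t < closest.t:
            closest = hit
    # Exactly one of the two final shaders runs for each ray
    return closest_hit(closest) if closest is not None else miss()

distances = {"wall": 4.0, "glass": 2.0, "lamp": None}
result = trace_ray(
    ["wall", "glass", "lamp"],
    intersection=lambda o: distances[o],
    any_hit=lambda h: h.obj != "glass",  # treat the glass as fully transparent
    closest_hit=lambda h: f"shade {h.obj} at t={h.t}",
    miss=lambda: "sky",
)
print(result)  # shade wall at t=4.0
```

The glass is nearer, but the any-hit callback rejects it, so the closest-hit stage shades the wall instead.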

Now, there is one element missing from this diagram, and it is currently one of the biggest problems in ray tracing: traversing the acceleration structure. And what is that? We have covered it in our ray tracing tutorials, but a refresher never hurts.

Data structures to speed up ray tracing

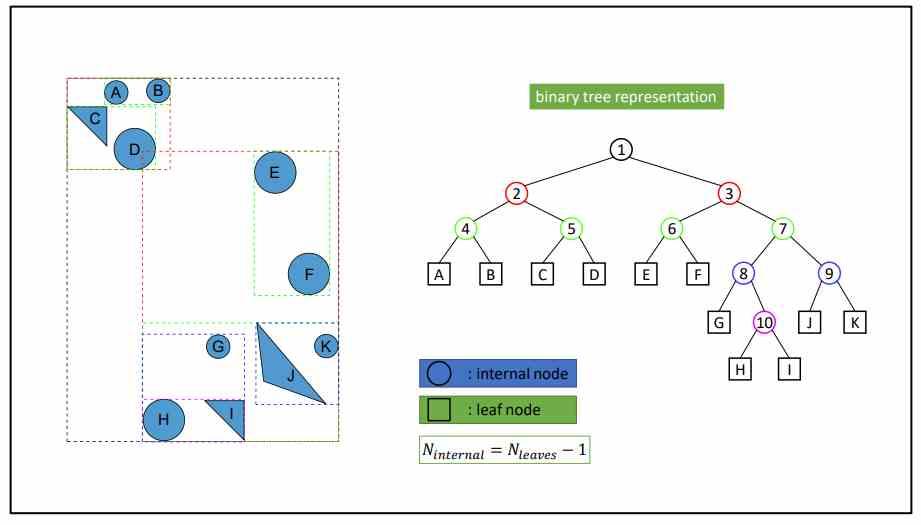

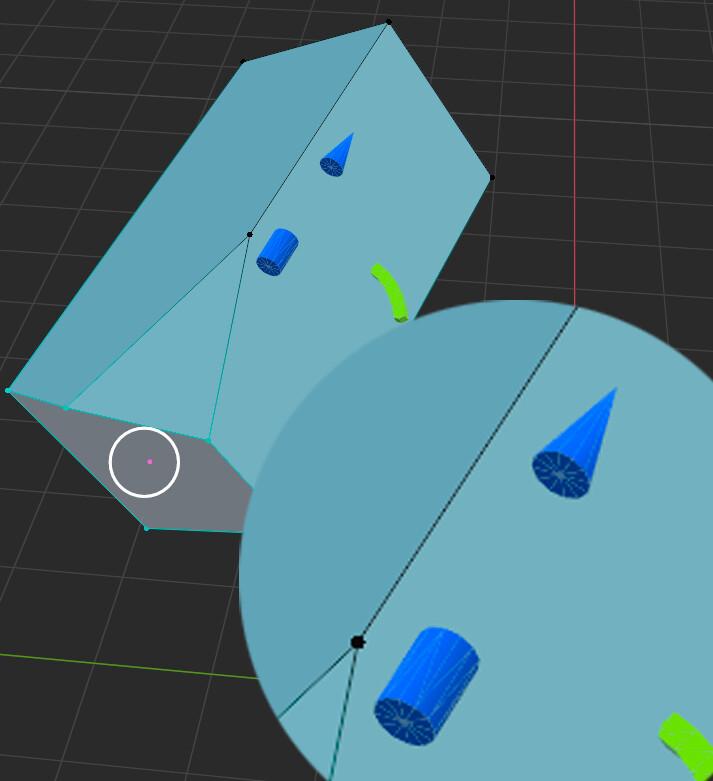

To accelerate ray tracing algorithms, and therefore make them run faster, the positions of the objects in the scene are mapped into a data structure shaped like a binary tree, which the GPU then has to traverse.
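To make this concrete, here is a minimal Python sketch of building such a tree, known as a bounding volume hierarchy (BVH), over a list of triangles. It assumes the simplest possible strategy, a median split along the longest axis of each box; the names `AABB`, `BVHNode` and `build_bvh` are hypothetical, and real GPU builders rely on much more elaborate heuristics such as the surface area heuristic (SAH).

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]
Triangle = Tuple[Vec3, Vec3, Vec3]

@dataclass
class AABB:
    lo: Vec3  # minimum corner
    hi: Vec3  # maximum corner

    @staticmethod
    def around(tris: List[Triangle]) -> "AABB":
        """Smallest axis-aligned box enclosing every vertex of the triangles."""
        pts = [p for tri in tris for p in tri]
        lo = tuple(min(p[i] for p in pts) for i in range(3))
        hi = tuple(max(p[i] for p in pts) for i in range(3))
        return AABB(lo, hi)

@dataclass
class BVHNode:
    box: AABB
    left: Optional["BVHNode"] = None
    right: Optional["BVHNode"] = None
    tris: List[Triangle] = field(default_factory=list)  # only filled in leaves

    def is_leaf(self) -> bool:
        return self.left is None

def centroid(tri: Triangle) -> Vec3:
    return tuple(sum(p[i] for p in tri) / 3.0 for i in range(3))

def build_bvh(tris: List[Triangle], max_leaf: int = 2) -> BVHNode:
    """Recursive median split along the longest axis of the node's box."""
    box = AABB.around(tris)
    if len(tris) <= max_leaf:
        return BVHNode(box, tris=list(tris))
    extent = [box.hi[i] - box.lo[i] for i in range(3)]
    axis = extent.index(max(extent))
    ordered = sorted(tris, key=lambda tri: centroid(tri)[axis])
    mid = len(ordered) // 2
    return BVHNode(box, left=build_bvh(ordered[:mid], max_leaf),
                   right=build_bvh(ordered[mid:], max_leaf))

# Four small triangles laid out along the x axis
tris = [((x, 0.0, 0.0), (x + 1.0, 0.0, 0.0), (x, 1.0, 0.0)) for x in (0.0, 2.0, 4.0, 6.0)]
root = build_bvh(tris)
print(root.box)  # AABB(lo=(0.0, 0.0, 0.0), hi=(7.0, 1.0, 0.0))
```

The root box encloses the whole scene, and each level below it splits the objects into two smaller groups, which is what lets a ray skip large parts of the scene at once.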

To understand how this data structure is traversed: the process starts at the root, which represents the entire scene, and descends level by level until it reaches the last one. At each level, a request is made to the RT Core or equivalent unit to check whether the ray intersects the node's bounding box. If it does, traversal continues to the next level; if it does not, that path is abandoned entirely. This repeats until the end of the tree is reached, where ray-box intersection tests give way to ray-polygon intersection tests.
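The walk described above can be sketched in Python as follows. It is an illustration under stated assumptions, not how any GPU implements it: the tree is built by hand, the ray-box check uses the classic slab test, and leaves use the Möller-Trumbore ray-triangle test. `Node` and `traverse` are hypothetical names; each call to `ray_hits_box` plays the role of the request to the RT Core or equivalent unit.

```python
import math
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]
Triangle = Tuple[Vec3, Vec3, Vec3]

def sub(a: Vec3, b: Vec3) -> Vec3: return (a[0] - b[0], a[1] - b[1], a[2] - b[2])
def dot(a: Vec3, b: Vec3) -> float: return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]
def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])

@dataclass
class Node:
    lo: Vec3
    hi: Vec3
    children: List["Node"] = field(default_factory=list)  # empty for leaves
    tris: List[Triangle] = field(default_factory=list)

def ray_hits_box(orig: Vec3, direction: Vec3, lo: Vec3, hi: Vec3) -> bool:
    """Slab test: clip the ray against the three pairs of axis-aligned planes."""
    t_near, t_far = 0.0, math.inf
    for i in range(3):
        if direction[i] == 0.0:
            if not (lo[i] <= orig[i] <= hi[i]):
                return False  # parallel to this slab and outside it
            continue
        t0 = (lo[i] - orig[i]) / direction[i]
        t1 = (hi[i] - orig[i]) / direction[i]
        t_near = max(t_near, min(t0, t1))
        t_far = min(t_far, max(t0, t1))
    return t_near <= t_far

def ray_hits_triangle(orig: Vec3, direction: Vec3, tri: Triangle,
                      eps: float = 1e-9) -> Optional[float]:
    """Möller-Trumbore test; returns the hit distance t or None."""
    v0, v1, v2 = tri
    e1, e2 = sub(v1, v0), sub(v2, v0)
    p = cross(direction, e2)
    det = dot(e1, p)
    if abs(det) < eps:
        return None  # ray parallel to the triangle plane
    inv = 1.0 / det
    s = sub(orig, v0)
    u = dot(s, p) * inv
    if u < 0.0 or u > 1.0:
        return None
    q = cross(s, e1)
    v = dot(direction, q) * inv
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(e2, q) * inv
    return t if t > eps else None

def traverse(root: Node, orig: Vec3, direction: Vec3) -> Optional[float]:
    """Walk down from the root, pruning every subtree whose box the ray misses."""
    closest: Optional[float] = None
    stack = [root]
    while stack:
        node = stack.pop()
        if not ray_hits_box(orig, direction, node.lo, node.hi):
            continue  # no box intersection: this whole path is abandoned
        if node.children:
            stack.extend(node.children)  # go down one level
        else:
            for tri in node.tris:  # leaf: ray-triangle tests instead of ray-box
                t = ray_hits_triangle(orig, direction, tri)
                if t is not None and (closest is None or t < closest):
                    closest = t
    return closest

tri_a = ((0.0, 0.0, 5.0), (1.0, 0.0, 5.0), (0.0, 1.0, 5.0))
tri_b = ((10.0, 10.0, 5.0), (11.0, 10.0, 5.0), (10.0, 11.0, 5.0))
root = Node((0.0, 0.0, 5.0), (11.0, 11.0, 5.0), children=[
    Node((0.0, 0.0, 5.0), (1.0, 1.0, 5.0), tris=[tri_a]),
    Node((10.0, 10.0, 5.0), (11.0, 11.0, 5.0), tris=[tri_b]),
])
print(traverse(root, (0.2, 0.2, 0.0), (0.0, 0.0, 1.0)))  # 5.0
print(traverse(root, (5.0, 5.0, 0.0), (0.0, 0.0, 1.0)))  # None
```

The second ray enters the root box but misses both child boxes, so no triangle test is ever performed for it.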

If you were paying attention, you will have noticed that among the shader types in the diagram of the previous section there are intersection shaders, but none in charge of traversing the BVH tree. This task is understood to be performed by the shader units, even though no specific shader type exists for it.

Traversal Shaders: what are they and where do they come from?

The DirectX Raytracing (DXR) documentation lists, among its potential future features, the so-called Traversal Shaders, which are expected to be added to the ray tracing pipeline in a later version of Microsoft's API. But first, let's put this in context.

So far, traversing the data structure, even though it is done by a shader program, is a generic process controlled by the graphics driver, so programmers have no say in it. A traversal shader, by contrast, gives the application code control over the traversal process, node by node.
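No such shader type exists in DXR yet, so the following is only a conceptual Python sketch of what handing node-by-node control to the application could look like. The `Decision` values, the `TraversalShader` signature and `traverse` are hypothetical, and the ray-box test is left out to keep the focus on who makes the decision.

```python
from dataclasses import dataclass, field
from enum import Enum, auto
from typing import Callable, List

class Decision(Enum):
    DESCEND = auto()    # visit this node and continue into its children
    SKIP = auto()       # ignore this node and its whole subtree
    TERMINATE = auto()  # stop traversing for this ray

@dataclass
class Node:
    name: str
    depth: int
    children: List["Node"] = field(default_factory=list)

# Hypothetical signature of an application-supplied traversal shader
TraversalShader = Callable[[Node], Decision]

def traverse(root: Node, shader: TraversalShader) -> List[str]:
    """Depth-first walk in which the application decides, node by node,
    what happens next (the ray-box test is omitted for brevity)."""
    visited: List[str] = []
    stack = [root]
    while stack:
        node = stack.pop()
        decision = shader(node)
        if decision is Decision.TERMINATE:
            break
        if decision is Decision.SKIP:
            continue
        visited.append(node.name)
        stack.extend(reversed(node.children))  # pop children left to right
    return visited

tree = Node("root", 0, [Node("A", 1, [Node("A1", 2), Node("A2", 2)]),
                        Node("B", 1, [Node("B1", 2)])])
print(traverse(tree, lambda n: Decision.DESCEND))  # ['root', 'A', 'A1', 'A2', 'B', 'B1']
print(traverse(tree, lambda n: Decision.SKIP if n.depth > 1 else Decision.DESCEND))  # ['root', 'A', 'B']
```

Returning an explicit decision per node is what distinguishes this from a driver-controlled walk: the same tree yields different amounts of work depending on the rule the application plugs in.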

And what performance benefits does this bring? The main one is that we can define cases in which one or more rays are discarded before the intersection test is performed, which is not possible at the moment. A very clear example is objects very far from the camera, where lighting detail cannot be appreciated as well as at close range. Keep in mind that in the current version of Microsoft's API we can decide, as far as indirect lighting is concerned, whether an object casts rays or not through the Ray Generation Shader, but we cannot set up cases where rays are discarded on the fly, especially based on distance.
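The distance rule from the previous paragraph could be expressed as a small culling pass over the tree. This is a hypothetical Python sketch: `max_distance` is an arbitrary threshold chosen by the application, the node layout and function names are made up, and a real traversal shader would apply the same test per ray inside the traversal loop rather than as a separate pass.

```python
import math
from dataclasses import dataclass, field
from typing import List, Tuple

Vec3 = Tuple[float, float, float]

@dataclass
class Node:
    lo: Vec3
    hi: Vec3
    children: List["Node"] = field(default_factory=list)
    objects: List[str] = field(default_factory=list)  # leaf payload

def distance_to_box(p: Vec3, lo: Vec3, hi: Vec3) -> float:
    """Euclidean distance from point p to the closest point of the box (0 if inside)."""
    d = [max(lo[i] - p[i], 0.0, p[i] - hi[i]) for i in range(3)]
    return math.sqrt(sum(c * c for c in d))

def candidates_within(root: Node, camera: Vec3, max_distance: float) -> List[str]:
    """Collect the objects that would still be tested for intersections,
    discarding every subtree whose box lies beyond max_distance from the camera."""
    out: List[str] = []
    stack = [root]
    while stack:
        node = stack.pop()
        if distance_to_box(camera, node.lo, node.hi) > max_distance:
            continue  # too far for the detail to be noticeable: skip the subtree
        stack.extend(node.children)
        out.extend(node.objects)
    return out

near = Node((0.0, 0.0, 0.0), (1.0, 1.0, 1.0), objects=["rock"])
far = Node((50.0, 0.0, 0.0), (51.0, 1.0, 1.0), objects=["mountain"])
scene = Node((0.0, 0.0, 0.0), (51.0, 1.0, 1.0), children=[near, far])
camera = (0.5, 0.5, -2.0)
print(candidates_within(scene, camera, max_distance=10.0))  # ['rock']
```

Measuring the distance from the camera, rather than from wherever a secondary ray starts, is one possible design choice; the point is that the rule lives in application code.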

Traversal Shaders to build the spatial data structure

In 2020, Intel's graphics R&D division presented a paper titled Lazy Build of Acceleration Structures with Traversal Shaders. As the title suggests, it describes building the spatial data structures themselves using Traversal Shaders, so these shaders can be used not only to control traversal, but also to build the structure.
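The idea of building on demand can be illustrated with a deliberately simplified Python sketch. It assumes a two-level scene, where each object has its own structure referenced from a top-level one, and it does not reproduce the algorithm of Intel's paper; `LazyInstance`, `build_structure` and `on_instance_reached` are hypothetical names, and sorting the triangles merely stands in for an actual BVH build.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Triangle = Tuple[Tuple[float, float, float], ...]

@dataclass
class LazyInstance:
    """A scene object whose own acceleration structure is built only when needed."""
    name: str
    triangles: List[Triangle]
    structure: Optional[List[Triangle]] = None  # stand-in for a per-object BVH
    builds: int = 0  # how many times the (expensive) build actually ran

def build_structure(inst: LazyInstance) -> None:
    # Placeholder for a real BVH build; sorting keeps the example self-contained
    inst.structure = sorted(inst.triangles)
    inst.builds += 1

def on_instance_reached(inst: LazyInstance) -> List[Triangle]:
    """What a traversal shader could do when a ray reaches an object's bounding
    box: build the object's structure on first use, then keep traversing it."""
    if inst.structure is None:
        build_structure(inst)
    return inst.structure

tri = ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
car, tree, mountain = (LazyInstance(n, [tri]) for n in ("car", "tree", "mountain"))
for inst in (car, tree, car):  # rays reach the car twice and the tree once
    on_instance_reached(inst)
print(car.builds, tree.builds, mountain.builds)  # 1 1 0
```

The mountain, which no ray reached, never pays the construction cost, and the car is built only once even though two rays reached it.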

The first thing that stands out is the term Lazy Build, which, put politely, means a build done with as little effort as possible. And what does it consist of? The technique seeks to reduce the construction time of the data structure. To do so, it relies on information from previous frames combined with a visibility algorithm. If this sounds confusing, let's define what visibility means in 3D rendering.
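One possible way to combine previous-frame information with on-demand building is sketched below in Python. This is our own hypothetical illustration, not the method of the paper: objects seen in the previous frame are built before traversal starts, and anything else is only built when a ray actually reaches it. `lazy_frame` and its parameters are made-up names.

```python
from typing import List, Set, Tuple

def lazy_frame(built: Set[str], visible_prev: Set[str],
               reached: Set[str]) -> Tuple[List[str], List[str]]:
    """One frame of a hypothetical lazy builder.

    built:        objects that already have an acceleration structure (updated in place)
    visible_prev: objects the previous frame saw, used as a prediction for this one
    reached:      objects that rays actually reached during this frame
    Returns (objects built up front, objects built on demand during traversal).
    """
    prebuilt = sorted(visible_prev - built)  # 1) trust last frame's visibility
    built |= visible_prev
    on_demand = sorted(reached - built)      # 2) prediction misses, built when a ray arrives
    built |= reached
    return prebuilt, on_demand

built: Set[str] = set()
print(lazy_frame(built, {"car", "tree"}, {"car", "house"}))  # (['car', 'tree'], ['house'])
print(lazy_frame(built, {"car", "house"}, {"car"}))          # ([], [])
```

Objects that are neither predicted nor reached by any ray are never built at all, which is where the time savings come from.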

We have to start from the principle that, when a GPU renders, what it computes is the visibility between a point in space and the first visible surface in a given direction or, to put it simply, the visibility between two elements. One caveat before continuing: when reading this, you probably imagined yourself looking at two objects. That is not quite it. We are talking about how one object would see another if it could see; in the simplest case, though, the observer is the camera, the viewpoint from which we render.
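Visibility between two points boils down to a simple question: does anything block the straight segment that joins them? The Python sketch below answers it for a scene made only of spheres, an assumption chosen to keep the intersection math short; `visible` is a hypothetical helper. Camera visibility is the same query with the camera as one of the two points.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
Sphere = Tuple[Vec3, float]  # (center, radius)

def visible(p: Vec3, q: Vec3, occluders: List[Sphere], eps: float = 1e-6) -> bool:
    """True if no sphere blocks the straight segment from p to q."""
    d = tuple(q[i] - p[i] for i in range(3))
    length = math.sqrt(sum(c * c for c in d))
    if length == 0.0:
        return True
    d = tuple(c / length for c in d)  # unit direction from p towards q
    for center, radius in occluders:
        # Solve |p + t*d - center|^2 = radius^2, i.e. t^2 + 2bt + c = 0
        oc = tuple(p[i] - center[i] for i in range(3))
        b = sum(oc[i] * d[i] for i in range(3))
        c = sum(x * x for x in oc) - radius * radius
        disc = b * b - c
        if disc < 0.0:
            continue  # the line misses this sphere
        root = math.sqrt(disc)
        for t in (-b - root, -b + root):
            if eps < t < length - eps:
                return False  # an intersection strictly between p and q
    return True

ball = ((5.0, 0.0, 0.0), 1.0)
print(visible((0.0, 0.0, 0.0), (10.0, 0.0, 0.0), [ball]))  # False
print(visible((0.0, 0.0, 0.0), (0.0, 10.0, 0.0), [ball]))  # True
```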

In the hybrid rendering that combines rasterization with ray tracing, which is what ray-traced games use today, visibility from the camera is not computed with ray tracing but with rasterization. The idea for the future is to compute camera visibility with ray tracing as well, so that the GPU can use this information to build the data structure of the entire scene through Traversal Shaders.
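As a rough illustration of computing camera visibility with ray tracing, the following Python sketch casts one primary ray per pixel from a pinhole camera and records which object is the first surface hit. The sphere-only scene, the camera setup and the function names are assumptions for illustration; the resulting set of visible objects is the kind of information that could then decide which parts of the scene structure get built.

```python
import math
from typing import Dict, Optional, Set, Tuple

Vec3 = Tuple[float, float, float]

def hit_sphere(orig: Vec3, d: Vec3, center: Vec3, radius: float) -> Optional[float]:
    """Nearest positive hit distance along the unit direction d, or None."""
    oc = tuple(orig[i] - center[i] for i in range(3))
    b = sum(oc[i] * d[i] for i in range(3))
    c = sum(x * x for x in oc) - radius * radius
    disc = b * b - c
    if disc < 0.0:
        return None
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):
        if t > 1e-6:
            return t
    return None

def primary_visibility(spheres: Dict[str, Tuple[Vec3, float]], width: int,
                       height: int, fov_deg: float = 60.0) -> Set[str]:
    """Cast one ray per pixel from a pinhole camera at the origin looking down -z
    and return the names of the objects that are the first surface hit."""
    scale = math.tan(math.radians(fov_deg) / 2.0)
    aspect = width / height
    seen: Set[str] = set()
    for j in range(height):
        for i in range(width):
            x = (2.0 * (i + 0.5) / width - 1.0) * scale * aspect
            y = (1.0 - 2.0 * (j + 0.5) / height) * scale
            n = math.sqrt(x * x + y * y + 1.0)
            d = (x / n, y / n, -1.0 / n)
            best, best_name = math.inf, None
            for name, (center, radius) in spheres.items():
                t = hit_sphere((0.0, 0.0, 0.0), d, center, radius)
                if t is not None and t < best:
                    best, best_name = t, name  # keep only the closest surface
            if best_name is not None:
                seen.add(best_name)
    return seen

scene = {
    "front": ((0.0, 0.0, -5.0), 1.0),
    "hidden": ((0.0, 0.0, -10.0), 0.5),  # completely behind "front"
    "side": ((3.0, 0.0, -5.0), 1.0),
    "behind": ((0.0, 0.0, 5.0), 1.0),    # behind the camera
}
print(sorted(primary_visibility(scene, 16, 16)))  # ['front', 'side']
```

Only the objects in the returned set would need their structures built up front; the occluded sphere and the one behind the camera could be deferred.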