We constantly see news that processors, be they CPUs, GPUs or heterogeneous systems such as SoCs, keep increasing their number of cores, and we take this for granted. But the internal communication between the different elements of a processor is limited by a factor that until recently was not fully taken into account: energy consumption.

There is a cost that is usually overlooked, because it does not appear to be directly related to the number of calculations a processor can perform or to the number of elements it contains. Yet in recent years it has become the number one problem for hardware architects, and the one least talked about in the specialized computing media, precisely because it is the elephant in the room: the energy cost of moving data within a processor.

Internal communication and data transfer

The simplest way to transmit data from one electronic system to another is through a transmitter and a receiver that exchange signal pulses continuously, with the clock signal setting the beat for each bit sent to the receiver, as if it were a metronome. The wiring provides the pathways along which these signals travel.

If we want to transmit data in both directions, we just place a transmitter and a receiver for each direction; and if we want to send several bits in parallel, we simply add more transmitter/receiver pairs, one per bit.
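To make the transmitter/receiver picture concrete, here is a minimal Python sketch, assuming an idealized link that moves exactly one bit per wire per clock tick with no encoding or protocol overhead. The function names (`transfer_cycles`, `transceiver_pairs`, `serialize`, `deserialize`) are hypothetical, chosen only for illustration. It shows how adding parallel lanes cuts the number of clock ticks needed per word, and how a bidirectional link doubles the transmitter/receiver hardware.

```python
import math


def transfer_cycles(payload_bits: int, lanes: int) -> int:
    """Clock ticks needed to send `payload_bits` when each of `lanes` wires
    carries one bit per tick (idealized: no headers, no encoding overhead)."""
    if payload_bits < 0 or lanes < 1:
        raise ValueError("payload_bits must be >= 0 and lanes must be >= 1")
    return math.ceil(payload_bits / lanes)


def transceiver_pairs(lanes: int, bidirectional: bool) -> int:
    """One transmitter/receiver pair per lane, doubled if data flows both ways."""
    return lanes * (2 if bidirectional else 1)


def serialize(word: int, width: int, lanes: int) -> list[list[int]]:
    """Transmitter side: split a `width`-bit word, least significant bit first,
    into the groups of bits driven onto the wires on each clock tick."""
    bits = [(word >> i) & 1 for i in range(width)]
    return [bits[i:i + lanes] for i in range(0, width, lanes)]


def deserialize(ticks: list[list[int]]) -> int:
    """Receiver side: sample each tick's bits and rebuild the original word."""
    word = 0
    position = 0
    for group in ticks:
        for bit in group:
            word |= bit << position
            position += 1
    return word


if __name__ == "__main__":
    # A byte over one wire takes 8 ticks; over 4 parallel wires, 2 ticks.
    print(len(serialize(0xA5, width=8, lanes=1)))  # 8
    print(len(serialize(0xA5, width=8, lanes=4)))  # 2
    print(deserialize(serialize(0xA5, width=8, lanes=4)) == 0xA5)  # True
    print(transceiver_pairs(lanes=8, bidirectional=True))  # 16
```

Note that nothing in this model accounts for energy yet: every extra lane is "free", which is exactly the assumption the rest of the article questions.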

Sounds simple, doesn't it? But we are missing one thing: the cost of moving information from one part of the chip to another. It is as if we were told that a fleet of trucks can carry so many kilos of goods, while the cost of fuel had been forgotten all along because, until a certain point, it was completely marginal.

A Little History: The End of Dennard Scaling

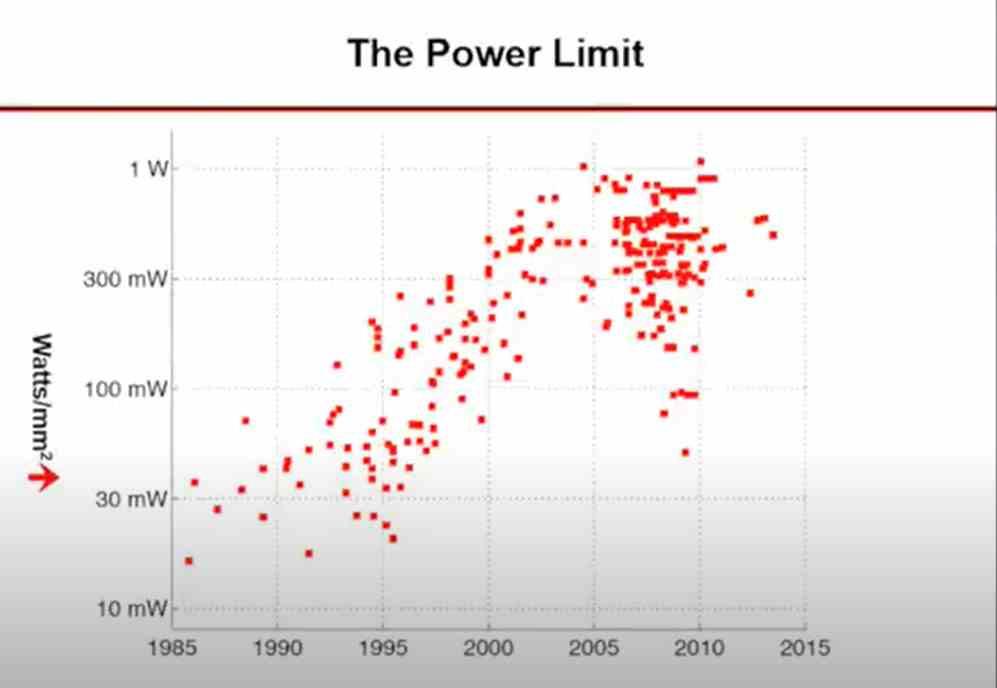

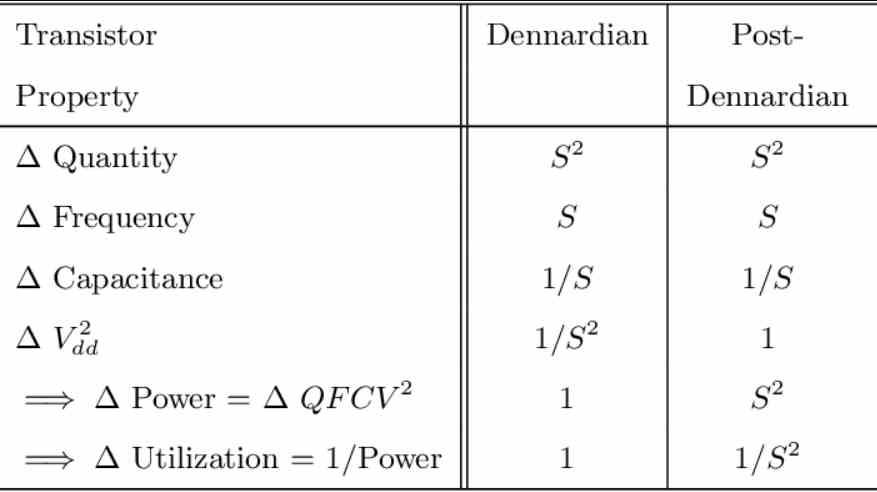

Dennard scaling came to an end in the mid-2000s, around the 65 nm node, but it still has important consequences today. Stated as a law, it can be summarized as follows:

If the feature dimensions are scaled down from one lithographic manufacturing node to the next, and the supply voltage is scaled down by the same factor, then power consumption per unit area should remain the same.
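The law can be checked with the standard dynamic-power formula P = α·C·V²·f (activity factor, switched capacitance, supply voltage, clock frequency). The sketch below is a simplified model, assuming an idealized linear shrink factor k > 1 per node: capacitance falls by k, frequency rises by k, area falls by k², and voltage either falls by k (Dennard's premise) or stays fixed. Leakage power is ignored, and the helper names are hypothetical.

```python
def power_density(c: float, v: float, f: float, area: float, activity: float = 1.0) -> float:
    """Dynamic power per unit area, using P = activity * C * V^2 * f."""
    return activity * c * v ** 2 * f / area


def shrink(c: float, v: float, f: float, area: float, k: float, scale_voltage: bool = True):
    """One idealized node step with linear shrink factor k > 1 (leakage ignored)."""
    return (
        c / k,                          # smaller gates and wires: less capacitance
        v / k if scale_voltage else v,  # Dennard assumes voltage drops with size
        f * k,                          # smaller transistors switch faster
        area / k ** 2,                  # both dimensions shrink
    )


if __name__ == "__main__":
    base = (1.0, 1.0, 1.0, 1.0)  # normalized C, V, f, area
    before = power_density(*base)
    ideal = power_density(*shrink(*base, k=2.0))
    stuck = power_density(*shrink(*base, k=2.0, scale_voltage=False))
    print(ideal / before)  # 1.0: power density unchanged
    print(stuck / before)  # 4.0: power density grows by k^2
```

With k = 2 the arithmetic is exact; a typical node step is closer to k ≈ 1.4 (a 0.7× linear shrink), but the conclusion holds either way: once voltage stops scaling, power density climbs as k².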

How did Dennard scaling come to an end? Put simply, microprocessor designers pushed clock speeds much higher than they should have, and once supply voltage could no longer be lowered at the same pace as transistor size, power density rose until they hit a wall. This forced them to reverse the trend from 2005 onward, and the concept of performance per watt began to appear everywhere on marketing slides as the new measure of performance.

From that point on, engineers' priorities flipped: they went from almost completely disregarding energy consumption to trying to maximize the number of operations per watt a processor could perform. In the middle of all this marketing, however, the energy cost of moving data was overlooked, because for a long time it had been almost negligible. For some time now, it no longer is.

The bottleneck of internal communication

To understand the communication problem, we must bear in mind that adding elements to a processor also adds the channels they need to communicate with each other, and the number of channels grows faster than the number of participants: if n elements are all connected directly to one another, n(n-1)/2 point-to-point links are required.
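A quick way to see why the channel count matters is to count point-to-point links for a few common interconnect layouts. This is a minimal sketch under simplifying assumptions: each link is one bidirectional channel between two endpoints, and the function names are hypothetical. A fully connected design grows as n(n-1)/2, whereas rings and 2D meshes, which route traffic through intermediate nodes, grow roughly linearly with n at the cost of extra hops.

```python
def fully_connected_links(n: int) -> int:
    """Every endpoint wired directly to every other: n choose 2."""
    return n * (n - 1) // 2


def ring_links(n: int) -> int:
    """Each endpoint wired to its two neighbours, closing the loop."""
    if n < 2:
        return 0
    return 1 if n == 2 else n


def mesh_2d_links(rows: int, cols: int) -> int:
    """Grid of rows x cols endpoints with horizontal and vertical neighbour links."""
    return rows * (cols - 1) + cols * (rows - 1)


if __name__ == "__main__":
    # Columns: endpoints, fully connected, ring, 2D mesh
    # prints: 4 6 4 4 / 16 120 16 24 / 64 2016 64 112
    for side in (2, 4, 8):
        n = side * side
        print(n, fully_connected_links(n), ring_links(n), mesh_2d_links(side, side))
```

Going from 16 to 64 endpoints multiplies the fully connected link count by almost 17, while the mesh grows by less than 5; the price of the cheaper topology is that data may cross several links, each costing energy, to reach its destination.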

As a result, adding more elements to a processor also increases the number of communication channels, and keeping energy consumption stable forces a lower clock speed. The flip side is that, as the number of cores grows, energy consumption does not stay flat but keeps rising over time.

The interconnects that link the different cores grow over time as configurations become more complex. Both the amount of information they carry and the energy they spend doing so keep increasing, taking up an ever larger share of the energy budget of processors, whether CPUs or GPUs. This poses a particular challenge for GPUs, which are built from tens or even hundreds of cores.
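The trade-off between core count, clock speed and interconnect energy can be illustrated with a toy model. It assumes, purely for illustration, that every core and every link burns a fixed energy per clock cycle and that the cores are fully connected; the per-cycle energies and the 10 W budget are hypothetical placeholders, not figures for any real chip. Under a fixed power budget, the maximum clock is the budget divided by the energy spent per cycle, so more cores and links push the clock down while the interconnect takes a growing share of the power.

```python
def fully_connected_links(n: int) -> int:
    return n * (n - 1) // 2


def cycle_energy_j(n_cores: int, core_j: float, links: int, link_j: float) -> tuple[float, float]:
    """Energy per clock cycle spent by the cores and by the interconnect."""
    return n_cores * core_j, links * link_j


def max_clock_hz(budget_w: float, n_cores: int, core_j: float, links: int, link_j: float) -> float:
    """Highest clock at which (cores + links) energy per cycle stays within the budget."""
    cores, wires = cycle_energy_j(n_cores, core_j, links, link_j)
    return budget_w / (cores + wires)


def interconnect_share(n_cores: int, core_j: float, links: int, link_j: float) -> float:
    """Fraction of the dynamic power that goes to moving data between cores."""
    cores, wires = cycle_energy_j(n_cores, core_j, links, link_j)
    return wires / (cores + wires)


if __name__ == "__main__":
    BUDGET_W = 10.0  # hypothetical power budget
    CORE_J = 1e-9    # hypothetical energy per core per cycle
    LINK_J = 1e-10   # hypothetical energy per link per cycle
    for n in (4, 16, 64):
        links = fully_connected_links(n)
        ghz = max_clock_hz(BUDGET_W, n, CORE_J, links, LINK_J) / 1e9
        share = interconnect_share(n, CORE_J, links, LINK_J)
        print(f"{n:3d} cores: {ghz:.2f} GHz max, {share:.0%} of power on interconnect")
```

Even though each link costs a tenth of a core per cycle in this model, the quadratic link count means the interconnect ends up consuming most of the budget as the core count rises.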

So what exactly is the problem with internal communication in processors?

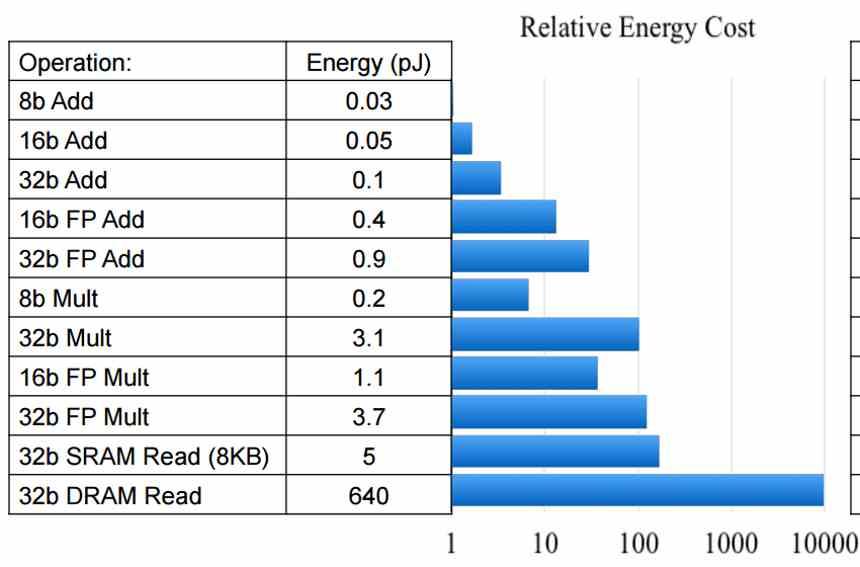

The problem engineers now face is that, for a simple addition, the energy spent adding the operands is negligible compared to the energy spent moving both operands to the adder. So the challenge is no longer making a simple ALU operating on data in its registers reach a given performance rate, but reaching that rate when the data lives farther away, which inevitably consumes more energy.
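The imbalance can be expressed with a first-order model in which the energy to move a bit grows linearly with wire length. The constants below (`ADD_PJ`, `WIRE_PJ_PER_BIT_MM`) are hypothetical placeholders chosen to keep the arithmetic easy to follow, not measured values for any process node; only the shape of the result matters. Two 64-bit operands are moved a given distance and then added once.

```python
ADD_PJ = 1.0              # hypothetical energy of one 64-bit addition, in picojoules
WIRE_PJ_PER_BIT_MM = 0.1  # hypothetical on-chip wire energy per bit per millimetre


def move_energy_pj(bits: int, distance_mm: float, pj_per_bit_mm: float = WIRE_PJ_PER_BIT_MM) -> float:
    """First-order wire model: energy grows with bits moved times distance."""
    return bits * distance_mm * pj_per_bit_mm


def move_to_add_ratio(distance_mm: float, operand_bits: int = 64, operands: int = 2) -> float:
    """How many additions' worth of energy is spent fetching the operands."""
    return move_energy_pj(operand_bits * operands, distance_mm) / ADD_PJ


def break_even_mm(operand_bits: int = 64, operands: int = 2) -> float:
    """Distance at which moving the operands costs as much as adding them."""
    return ADD_PJ / (operand_bits * operands * WIRE_PJ_PER_BIT_MM)


if __name__ == "__main__":
    print(f"break-even distance: {break_even_mm():.3f} mm")
    for d in (0.1, 1.0, 10.0):
        print(f"{d:5.1f} mm -> moving operands costs {move_to_add_ratio(d):.1f}x the add")
```

With these placeholder values the operands only need to travel about 0.08 mm before fetching them costs more than the addition itself; on a die several millimetres across, remote data quickly dominates the energy bill.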

This means that designs that look feasible on paper, as far as computing capacity is concerned, have to be discarded once the logistics of the data and the energy it consumes are studied.

At what rate does energy consumption scale?

When it comes to the energy consumption of internal communication, we have to split the energy cost into two blocks. On the one hand there is the energy cost of computing, which followed Dennard scaling; its improvement has slowed in recent years, but it still shows a steady progression toward higher performance.

On the other hand there is the communication cost. This does not refer to communication between RAM and the processor, but to how much energy it costs to move a block of data within the chip.
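Separating the two blocks makes the trend visible. The sketch below is a toy model, not data: it assumes, hypothetically, that each generation the energy per operation improves by a larger factor than the energy per transferred bit, and it tracks what fraction of a fixed workload's energy goes to communication. The improvement factors (`op_gain`, `bit_gain`) and workload sizes are illustrative placeholders.

```python
def energy_split_pj(ops: float, bits_moved: float, pj_per_op: float, pj_per_bit: float) -> tuple[float, float]:
    """Total energy of a workload, split into computing and data movement."""
    return ops * pj_per_op, bits_moved * pj_per_bit


def comm_fraction_by_generation(generations: int, ops: float, bits_moved: float,
                                pj_per_op: float, pj_per_bit: float,
                                op_gain: float, bit_gain: float) -> list[float]:
    """Share of energy spent on communication, one entry per generation.

    op_gain / bit_gain: hypothetical factor by which each cost shrinks per generation.
    """
    fractions = []
    for _ in range(generations):
        compute, comm = energy_split_pj(ops, bits_moved, pj_per_op, pj_per_bit)
        fractions.append(comm / (compute + comm))
        pj_per_op /= op_gain
        pj_per_bit /= bit_gain
    return fractions


if __name__ == "__main__":
    # Start with compute and communication costing the same, then let compute
    # improve 2x per generation while data movement improves only 1.2x.
    shares = comm_fraction_by_generation(5, ops=1e6, bits_moved=1e6,
                                         pj_per_op=1.0, pj_per_bit=1.0,
                                         op_gain=2.0, bit_gain=1.2)
    print([f"{s:.0%}" for s in shares])
```

As long as the per-bit cost improves more slowly than the per-operation cost, the communication share keeps rising even though both costs are falling in absolute terms.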

We cannot forget that computing and data transfer are linked to each other. Poor communication results in poor computing performance, and weak computing power leaves much of an efficient communication structure unused.
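One standard way to express this coupling is the roofline model (Williams, Waterman and Patterson), a technique brought in here rather than taken from the text. Attainable performance is the lower of the peak compute rate and the data bandwidth multiplied by the arithmetic intensity (operations per byte moved). It is usually applied to DRAM bandwidth; applying it to an on-chip link, as below, is an assumption, and the peak and bandwidth figures are hypothetical.

```python
def attainable_gflops(peak_gflops: float, bandwidth_gbs: float, intensity_flop_per_byte: float) -> float:
    """Roofline: performance is capped either by compute or by how fast data arrives."""
    return min(peak_gflops, bandwidth_gbs * intensity_flop_per_byte)


def ridge_point(peak_gflops: float, bandwidth_gbs: float) -> float:
    """Arithmetic intensity above which the compute units, not the link, are the limit."""
    return peak_gflops / bandwidth_gbs


if __name__ == "__main__":
    PEAK = 1000.0    # hypothetical peak compute, GFLOP/s
    LINK_BW = 100.0  # hypothetical link bandwidth, GB/s
    print(ridge_point(PEAK, LINK_BW))  # 10.0 FLOP/byte
    for ai in (1.0, 5.0, 10.0, 50.0):
        print(ai, attainable_gflops(PEAK, LINK_BW, ai))
```

Below the ridge point the ALUs sit idle waiting for data, so poor communication wastes computing power; above it, extra bandwidth goes unused, so weak compute wastes an efficient interconnect.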

The future is in infrastructure

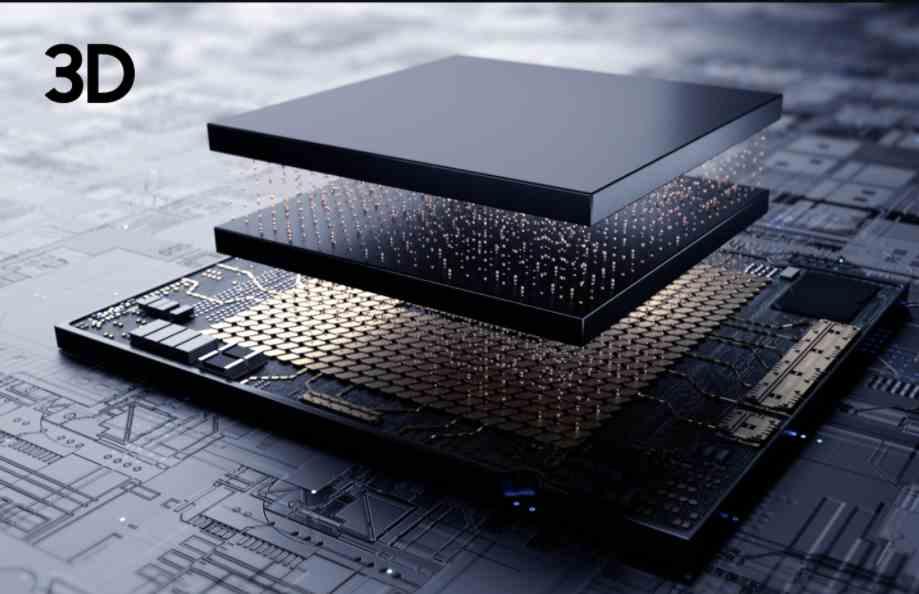

While the talk is of ever faster processors, with more cores and therefore more power, the real effort is going into improving energy efficiency when transmitting data, since that is the next bottleneck designers will run into as they push processor performance further.

That is why new ways of organizing a processor are being developed and are becoming increasingly common: scaling a processor in the conventional way is no longer possible without the specter of energy consumption from data transfer appearing.

For now, monolithic designs are holding up, but it is unclear how much further they can scale.